Research — 14 Jun, 2023

By Ian Hughes

The evolution of the metaverse is likely to build on existing infrastructure and application patterns. The experiences that have the metaverse attributes of shared, immersive, persistent, 3D virtual spaces, where humans and machines interact with one another and with data, may look similar on the surface to a user.

But for infrastructure providers, the architectures are quite varied. This report looks at four major architecture patterns and the significant differences between them.

Much of early metaverse development is emerging from the games industry, which has a particular architectural approach to multiplayer gaming. But that does not always sit well in nonconsumer environments. Installing large applications and running cloud-based servers is very different from a quick-to-access web page, and no single entity is making the architectural decisions as these applications develop. So all of these architectures and approaches will likely coexist — creating opportunities for infrastructure-related enterprises to differentiate their support for these variations, as well as for emerging hybrid approaches.

Context

Since computers were first connected, there have been many ways to arrange processing, storage and networking. Basic terminals connected to mainframes evolved into client-server architectures. The internet and the web led to cloud computing, then to edge computing. The burden of processing and storage has swung from server to client and back again as client devices (PCs, tablets, phones and game consoles) became more powerful. That power in turn leads to more demanding use cases that need the power of servers, mainframes, the cloud and supercomputers.

Networks also have churn. Once, "dumb" terminals sent characters to the mainframe in bursts. Now, internet streaming services deliver masses of rich data to mobile devices connected to 5G, and industrial internet of things instrumentation is collating and streaming high-frequency status data to edge and cloud servers. The question is how the metaverse will impact the available infrastructure, and the path to it.

Architecture patterns

The four main metaverse architectural patterns are not the only ones, and hybrids are plentiful. On the surface, the user experiences will be similar, but developers' architectural choices will impact infrastructure as things scale. These four approaches — a typical game, a web application, cloud streaming and peer-to-peer networking — can be used to deliver a virtual world metaverse application.

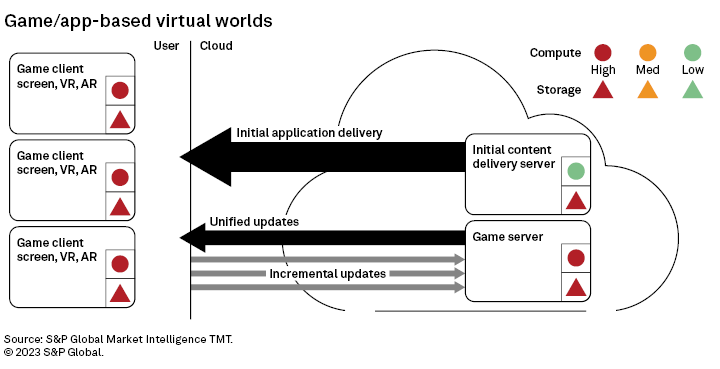

Most online multiplayer games on platforms such as Xbox, PS5 or PC are based on the traditional game architecture. A user has a high-performing device with its own processing, storage and network connection. The game, or virtual world environment, is delivered to the client machine as a whole application, with up to 100 GB of data to install, and the game provider must deliver that large volume of content on demand.

Most online games deliver constant updates and patches over the network. The game client can be using a standard screen, or have other virtual or augmented reality experiences, but the form factor doesn't matter — these are high-spec clients with storage and compute. To operate as a multiuser environment, a server is needed — in this case, in the cloud.

The server acts as the single source of truth for the users' shared experience. As each client app does something, say move an avatar, it uses its own app processing. Then that small amount of data (e.g., an x,y,z coordinate) is sent to the server, which redistributes it out to all the other clients to render that effect in their applications.
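The relay described above can be sketched in a few lines. This is a minimal in-memory illustration, not a real networking stack: the `Client` and `Server` classes and the `submit_move` method are hypothetical names, and method calls stand in for network messages.

```python
from dataclasses import dataclass, field

Position = tuple[float, float, float]


@dataclass
class Client:
    """A client that records every avatar position the server relays to it."""
    name: str
    seen: dict[str, Position] = field(default_factory=dict)

    def receive(self, avatar: str, position: Position) -> None:
        # A real client would re-render the avatar here; we only store the update.
        self.seen[avatar] = position


@dataclass
class Server:
    """Single source of truth: keeps the latest state and redistributes changes."""
    clients: list[Client] = field(default_factory=list)
    world: dict[str, Position] = field(default_factory=dict)

    def connect(self, client: Client) -> None:
        self.clients.append(client)

    def submit_move(self, sender: Client, position: Position) -> None:
        self.world[sender.name] = position  # authoritative copy
        for client in self.clients:
            if client is not sender:  # the sender already applied the move locally
                client.receive(sender.name, position)


server = Server()
alice, bob, carol = Client("alice"), Client("bob"), Client("carol")
for c in (alice, bob, carol):
    server.connect(c)

# Alice moves her avatar; only three coordinates travel over the "network".
server.submit_move(alice, (1.0, 0.0, 2.5))
```

After the call, `server.world` holds Alice's position, and Bob and Carol each hold a copy of it. The payload per move stays tiny, but the server's fan-out work grows with the number of connected clients.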

The game server is usually the same application (with some server-specific functions) as that running on each client, so it needs a lot of processing and storage. The speed of capturing and redistributing user input impacts the experience, so low-latency communication is essential for apps like high-speed gaming and esports. For others, like office meetings, low latency is more of a nice-to-have.

The client's processing power can fill in blanks — if a virtual vehicle is traveling in a certain direction at 50 mph, the next frame generated can interpolate and smooth out lost or delayed updates with the x,y,z coordinates coming from the server. Clients can also perform complex operations from small amounts of data. For example, one client triggers an avatar's dance animation, but only sends the server a reference (trigger dance 42). The other clients receive message 42 and play the same animation. Each animation may not start at the same time, but each user will see the same effect.
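Both ideas in the paragraph above can be illustrated briefly. The sketch below assumes the client knows an object's last reported position and velocity and projects it forward (a technique often called dead reckoning), and that every client ships with the same animation table. The `extrapolate` and `on_trigger` functions and the `ANIMATIONS` table are hypothetical.

```python
MPH_TO_METERS_PER_SECOND = 0.44704


def extrapolate(position, velocity, seconds_since_update):
    """Predict the current position from the last known position and velocity."""
    return tuple(p + v * seconds_since_update for p, v in zip(position, velocity))


# A vehicle traveling along the x axis at 50 mph, last reported at x = 100 m.
speed = 50 * MPH_TO_METERS_PER_SECOND
last_position = (100.0, 0.0, 0.0)
velocity = (speed, 0.0, 0.0)

# Two frames at 60 fps have passed without a server update, so the client
# fills the gap itself instead of freezing the vehicle in place.
predicted = extrapolate(last_position, velocity, 2 / 60)

# Hypothetical animation table installed with every client: the network only
# carries the small reference number, never the animation data itself.
ANIMATIONS = {42: "dance"}


def on_trigger(animation_id: int) -> str:
    """Return the locally stored animation that a received reference points to."""
    return ANIMATIONS[animation_id]
```

When the next real update arrives, a client would typically blend from the predicted position to the reported one rather than snapping, but that smoothing step is left out here.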

Our diagram represents the server running in the cloud. Often, in a more decentralized self-hosted approach, one of the clients acts as the server for everyone else. Typically, these architectures only cope with tens or hundreds of clients at a time in a single instance of an environment. Multiple servers might cover a wider environment, or multiple copies of the same environment might run for separate clusters of people. Large client installs, often with a client app requiring specific access to network ports, are a significant barrier to entry for many enterprise IT organizations. Having to install many different applications can be a barrier to entry for consumers, too.

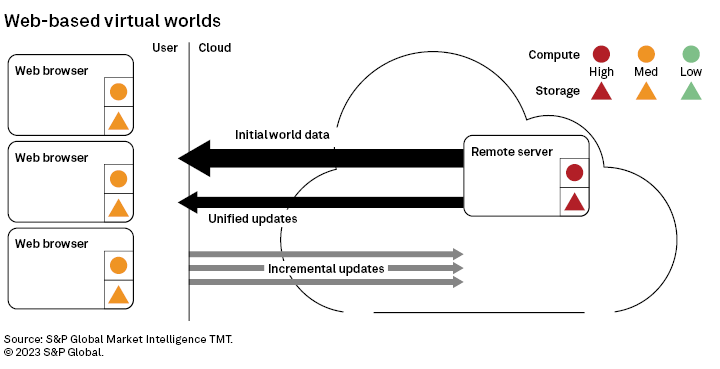

Web-based approaches to virtual worlds have evolved, with common libraries and APIs such as Babylon.js and WebGL to manipulate 3D content in the browser. Early web applications used installable plugins to change the browser's capability. But the development of common standards, together with the security problems inherent in plugin architectures, led to plugins being phased out. Web-based approaches represent a cut-down version of the game application pattern. A remote server holds the entire state of a persistent environment and acts as the source of truth for clients.

On accessing the environment, a web browser client (with potentially less storage and compute power than previous examples) has some setup and world data sent to it. The browser still holds a rendering of that data, but it is typically lower quality than a fully installed client. Each client sends the changes it is making in the world — such as moving, interacting or editing in the environment — and the server keeps and redistributes them. These apps can use some techniques of full game applications with event messaging and interpolation. Access through a browser makes it more IT-policy-friendly and makes hopping from experience to experience easier for the consumer.

The server still has a limiting factor in the I/O, but fewer messages are flowing than for a high-end game client coping with more concurrent users. With a web application, expectations for network latency may be lower, due to experiences being created at a lower fidelity. The challenge is that any application that is a URL can get very high peak traffic due to viral uptake, unlike a game with a known installed base. While augmented reality and VR may be user features, this is primarily down to the web browser's support on those devices.

The third pattern is cloud streaming (often called cloud gaming). This is a superset of the game client pattern. Here, the high compute/high-storage game client runs in the cloud on a virtual instance of the game console/PC that exists just for that user's session. Each virtualized client still talks to a server. The user's client may be a simple screen or web browser, and other applications may be virtual or augmented reality. Most of the benefit of cloud streaming comes from the user's client having to do very little work. VR and AR will have more local processing and storage complexity.

The basic client only receives frame after frame of visual and audio information from a cloud server, similar to a streaming movie. But a movie is always the same sequence of frames, whereas each frame generated by a virtual game app is unique to the user. A cloud rendering app, acting as a virtual screen for the virtual game application, will process and send the frame to the user's client. The user will have performed an action on a keyboard or joypad. That action then travels to the cloud-based virtual client to be acted on by the client, sent to the server and redistributed to the other clients, while the initiating client renders the next frame and sends it to the user.

That happens 30-60 times a second for every client, a very different balance of data flow compared to game- or app-based virtual worlds. The advantage is that the user can access the experience as simply as web-based virtual worlds but with a traditional game client's rendering quality. An existing game client can be deployed into cloud rendering and even mixed and matched with full client installs, making it very versatile. The downside is potential latency issues stacking up. A user's input must now travel all the way to the cloud instance, not the short distance to a local PC or console, before being processed. Then an entire video frame must be sent back over the same network, continuously.
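To see how latency stacks up against the 30-60 frames per second mentioned above, the sketch below adds up per-stage delays and expresses the total in frames. The stage names and millisecond values are illustrative assumptions, not measurements from any real service.

```python
import math


def frame_interval_ms(fps: int) -> float:
    """Time available to produce one frame at the given frame rate."""
    return 1000.0 / fps


def frames_of_delay(stage_latencies_ms: dict[str, float], fps: int) -> int:
    """Number of whole frames that pass between an input and its visible effect."""
    total_ms = sum(stage_latencies_ms.values())
    return math.ceil(total_ms / frame_interval_ms(fps))


# Assumed values for a locally installed client: input goes straight to the app.
local = {"input": 2.0, "simulate_and_render": 10.0}

# Assumed values for cloud streaming: the same work plus a network round trip
# and video encode/decode on either side of it.
cloud = {
    "input": 2.0,
    "uplink": 15.0,
    "simulate_and_render": 10.0,
    "encode": 5.0,
    "downlink": 15.0,
    "decode": 5.0,
}

local_frames = frames_of_delay(local, fps=60)
cloud_frames = frames_of_delay(cloud, fps=60)
```

With these assumed numbers, the local client responds within one frame at 60 fps, while the cloud path takes four. The exact figures will vary widely with network distance and codec choice. The sketch only shows that the cloud path adds stages the local path does not have.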

The roundtrip makes that much slower than local installs. Some applications can build that in if the delay is known, but this complex extra requirement removes the portability benefits of the same client running in multiple architectures. Cloud streaming also requires good infrastructure and bandwidth to the user location. We previously described the high-end requirements in engineering and simulation for the industrial metaverse sphere that are suited to this server-side rendering approach.

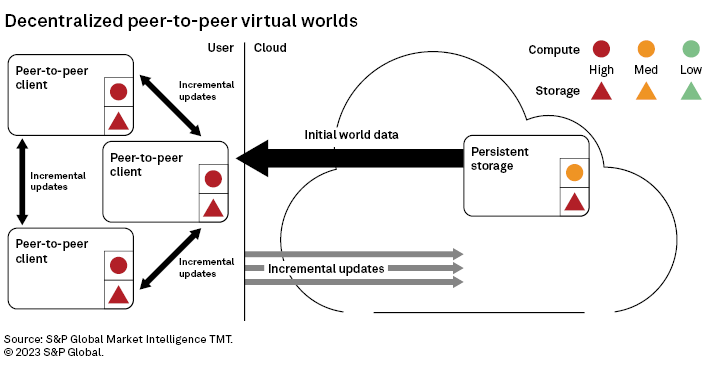

The fourth example, peer-to-peer networking, varies the most from the other three. A server still maintains the persistent state of an environment (it is there if no one is using it, but available as soon as needed), but the difference is that each client, likely a high compute/storage device, has a more equal role in sharing state changes. The peer-to-peer network may be local or global depending on the app. Peer data may be shared in a round-robin, passing from one peer to the next, or via pub/sub with changes broadcast to all interested parties.
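The pub/sub variant can be sketched as follows. This is an in-memory stand-in for a real peer-to-peer transport: `PeerBus`, `Peer` and the topic names are hypothetical, and a function call takes the place of a network broadcast.

```python
from collections import defaultdict


class PeerBus:
    """In-memory stand-in for a pub/sub channel shared by peers."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)  # topic -> list of handlers

    def subscribe(self, topic, handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic, sender, change) -> None:
        # Broadcast the change to every peer interested in this topic.
        for handler in self._subscribers[topic]:
            handler(sender, change)


class Peer:
    """A peer that applies its own changes locally and shares them with others."""

    def __init__(self, name: str, bus: PeerBus) -> None:
        self.name = name
        self.bus = bus
        self.state = {}

    def join(self, topic: str) -> None:
        self.bus.subscribe(topic, self._on_change)

    def _on_change(self, sender: str, change: dict) -> None:
        if sender != self.name:  # ignore the echo of our own broadcast
            self.state.update(change)

    def share(self, topic: str, change: dict) -> None:
        self.state.update(change)
        self.bus.publish(topic, self.name, change)


bus = PeerBus()
a, b, c = Peer("a", bus), Peer("b", bus), Peer("c", bus)
a.join("room-1")
b.join("room-1")
c.join("room-2")  # not interested in room-1 changes

a.share("room-1", {"door": "open"})
```

Peer `b` receives the change because it subscribed to the same topic, while peer `c` does not. A round-robin scheme would instead pass each change from one peer to the next in a fixed order.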

Some variants allow one client to assume the main server role to wrangle requests. If that client leaves, the duties hand off to a client that is present. Clients may end up with installed apps, but that's more a variant of the web client example. The relatively local networking between peers removes any cloud or server bottlenecks and roundtrip requirements. A variety of peer-to-peer approaches using protocols like WebRTC exist in video and audio communication, where a server state is not required, merely used to broker the initial connections.
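The host handoff described above might look like the sketch below. The selection rule (the longest-present peer becomes host) is an assumption chosen for simplicity, and `Session` is a hypothetical class; real systems may elect a host by latency, capacity or other criteria.

```python
class Session:
    """Tracks which peers are present and which one currently acts as host."""

    def __init__(self) -> None:
        self.peers = []  # kept in join order

    @property
    def host(self):
        # Assumed rule: the peer that has been present longest is the host.
        return self.peers[0] if self.peers else None

    def join(self, peer: str) -> None:
        self.peers.append(peer)

    def leave(self, peer: str) -> None:
        # If the departing peer was the host, the role passes to the next
        # peer in join order simply because it is now first in the list.
        self.peers.remove(peer)


session = Session()
for name in ("a", "b", "c"):
    session.join(name)

first_host = session.host   # "a" joined first
session.leave("a")
second_host = session.host  # duties hand off to "b"
```

A non-host peer leaving changes nothing, and an empty session has no host at all. That matches the text's point that a server is still needed if the environment must persist while no one is using it.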

Hybrids

Our four patterns are generalized examples. In hybrid cases, a web virtual world may also engage with some cloud-based rendering. Peer-to-peer may be used for a group chat, with a traditional game server architecture for visualizations.

These examples consider the network as a single, unchanging service that takes the entirety of a message, and the application has little more to do with it. As the requirements for real-time, low-latency communication increase, so does the need to give apps more ways to express requirements and engage with the network (e.g., this signal requires low latency this time).

Open-source network/edge projects

At the recent Open Metaverse Summit, the Linux Foundation discussed how developers would drive the need to improve network and edge infrastructure to support the metaverse. Low-latency experiences need responses in less than 10 milliseconds for a single participant, and at the speed of light in fiber, that budget allows a signal to cover only around 500 miles to reach a datacenter, before any processing happens. Hence the need for more edge-based processing and a more widely distributed computing model.
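As a rough check on the distance figure above, the calculation below estimates pure propagation delay in fiber. It assumes a refractive index of about 1.47 for silica fiber, a perfectly straight route and no switching or processing delay, so real-world numbers would be worse. The function names are hypothetical.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.47  # assumed typical value for silica fiber
KM_PER_MILE = 1.609344

# Light travels more slowly in glass than in a vacuum.
FIBER_SPEED_KM_PER_S = SPEED_OF_LIGHT_KM_PER_S / FIBER_REFRACTIVE_INDEX


def round_trip_ms(distance_miles: float) -> float:
    """Propagation time to a datacenter and back, ignoring all processing."""
    distance_km = distance_miles * KM_PER_MILE
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


def max_distance_miles(budget_ms: float) -> float:
    """Farthest datacenter reachable if the whole budget is spent on the round trip."""
    one_way_km = FIBER_SPEED_KM_PER_S * (budget_ms / 1000) / 2
    return one_way_km / KM_PER_MILE


trip_500 = round_trip_ms(500)       # time used by a 500-mile hop, there and back
limit_10ms = max_distance_miles(10)  # hard ceiling for a 10 ms budget
```

Under these assumptions, a datacenter 500 miles away costs a little under 8 milliseconds of round-trip propagation, leaving only about 2 milliseconds of a 10-millisecond budget for everything else. The theoretical ceiling is a little over 600 miles.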

The CAMARA project is a telco global API alliance to open access to the telecommunication layer for developers. The Akraino project provided blueprints and a software stack for edge computing management, including a specific example of an integrated AR/VR approach. These open-source organizations have joined forces to boost the edge API integration that will be needed as the metaverse develops.

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.

451 Research is part of S&P Global Market Intelligence. For more about 451 Research, please contact 451ClientServices@spglobal.com.