Research — 21 Sep, 2023

By Jean Atelsek and Yulitza Peraza

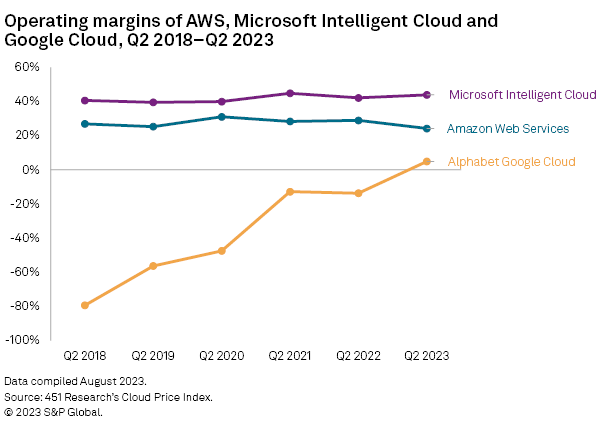

Financial results for the second quarter show hyperscaler parent companies Amazon.com Inc., Microsoft Corp. and Alphabet Inc. navigating the pervasive market opportunity brought by the generative AI frenzy. All three are forging synergistic partnerships with AI startups to secure access to intellectual property and provide compute capacity — presumably at a discount — for model training and tuning. Meanwhile, Google LLC is reasserting its claim to AI leadership as the operating margin of its Google Cloud unit has moved into positive territory.

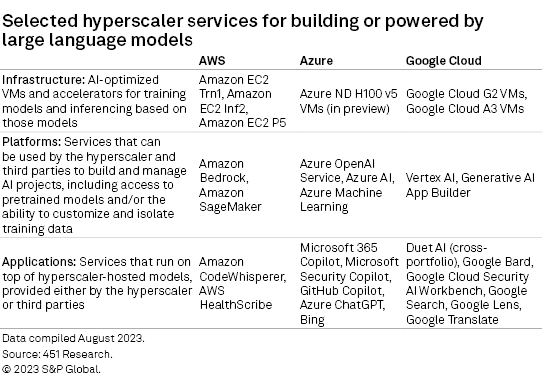

Cloud hyperscalers are among the few companies with access to the huge amounts of compute power required to train the large language models (LLMs) underlying generative AI applications such as ChatGPT and DALL-E 2. From this position, they can be kingmakers for software startups developing and refining their own LLMs for resale to organizations looking to plug in their content and data to create productivity-enhancing tools. During their second-quarter earnings calls, Amazon, Microsoft and Alphabet all pointed to increasing investment in infrastructure to power transformer AI models. Amazon Web Services Inc. (AWS), Azure and Google Cloud can turn this technology into sales on multiple levels: by reselling infrastructure to do-it-yourselfers, creating managed platforms for building and hosting customer-developed tools, and delivering prebuilt applications for pay-as-you-go consumption. LLMs also give providers an important entry point for the partners they need to meet this large-scale opportunity. As credit gets more expensive, even Big Tech will need to be prudent about capital expenditure outlays and find ways to economize to fuel this wave of investment.

Hyperscaler AI market opportunity scope ranges from infrastructure to apps

When Microsoft partner OpenAI LLC triggered the generative AI revolution in November 2022 by introducing a free version of its ChatGPT interactive bot, it opened up a world of possibilities for applying context-aware models to interactively supply information based on huge troves of content. For Azure and other hyperscale cloud providers, this presents a tremendous market opportunity at the infrastructure, platform and application levels. During their second-quarter earnings calls, AWS, Azure and Google Cloud officials all pointed to how this phenomenon will be guiding investment in the coming quarters.

Broadly speaking, the providers have been playing to type. AWS, with its appeal to "builders," has developed purpose-built silicon (Trainium and Inferentia) for the infrastructure layer; it is also reinforcing its partner assets in this space. Microsoft, with its focus on empowering "citizen developers," is stressing its offerings at the platform layer. Google, which basically grew up on machine learning and holds a patent on the transformer models underlying LLMs, has a wealth of AI-infused applications across its portfolio. All three are filling out their offerings across the spectrum.

During Amazon's second-quarter earnings call, CFO Brian Olsavsky projected "slightly more than $50 billion" in capital spending for 2023, versus $59 billion in 2022. "We expect fulfillment and transportation capex to be down year over year, partially offset by increased infrastructure capex to support growth of our AWS business, including additional investments related to generative AI and large language model efforts," Olsavsky said. Microsoft CFO Amy Hood referred to accelerating capital investment in cloud infrastructure but noted that "even with strong demand and a leadership position, [revenue] growth from our AI services will be gradual as Azure AI scales and our Copilots reach general availability dates." Alphabet CFO Ruth Porat, after noting that the largest component of capital spending in the second quarter was for servers, including "a meaningful increase in our investments in AI compute," anticipated higher spending into 2024 on technical infrastructure "to support the opportunities we see in AI across Alphabet, including investments in [graphics processing units] and proprietary [tensor processing units], as well as datacenter capacity."

The three CFOs all acknowledged that spending optimization by customers continues to influence revenue growth.

Building stakes externally

Beyond their in-house efforts, all three are also ramping up external generative AI investments, seeking to increase their financial stakes and influence across the broader ecosystem and to incentivize development on their technology stacks. Amazon in April committed $100 million in the form of a 10-week global generative AI accelerator program that included mentorship and hundreds of thousands of dollars in AWS credits. Google and Microsoft are executing primarily through their venture arms, Google Ventures and M12. Notable events include:

– Google's lead investment in a $141 million extension to text-to-video startup Runway's series C round alongside Nvidia Corp. and Salesforce Inc.

– Microsoft's lead investment in June in a $1.3 billion funding round, at a $4 billion valuation, for Inflection AI Inc., a company that focuses on making AI more personal.

– Also in June, both vendors participated in a $65 million series B round for Typeface Inc., an enterprise-focused generative AI company, at a $1 billion post-money valuation.

Investment of this nature often foments dealmaking, so we would not be surprised to see Google or Microsoft reach more formally into these startup portfolios as both the companies and the landscape mature.

Google Cloud operating margin moves into positive territory

In the press release announcing its second-quarter results, Alphabet revealed that CFO Ruth Porat, who has held the role since 2015, was promoted, effective Sept. 1, to a new role as president and chief investment officer of Alphabet and Google. In that role, she oversees Alphabet's Other Bets portfolio, including the Waymo LLC self-driving, Verily Life Sciences LLC healthcare and Access/Fiber connectivity businesses, and works more closely with policymakers and regulators, a critical function as lawmakers grapple with the potential impacts of AI.

Porat has presided over long-term investments in Google Cloud infrastructure, including datacenter expansion, that came to fruition in the first quarter, when the unit posted its first-ever operating profit, a trend that continued into the second quarter. Porat has also been guiding Alphabet's cost-cutting moves, including a 12,000-person layoff at the beginning of the year and continued optimization of the company's real estate holdings, as part of "durably reengineering our cost base to increase capacity for investments," Porat said on the second-quarter earnings call. Porat will continue as CFO, leading the company's 2024 and long-range capital planning, while Alphabet searches for a successor.

Given the profound implications of generative AI on hyperscaler portfolios — 451 Research's Market Monitor service projects 10x growth in the market to 2028 — we can expect gains to accrue to all units of the hyperscaler parent companies. In April, Alphabet announced it was consolidating its AI development efforts by merging its DeepMind subsidiary, acquired in 2014, with its Brain team into a new unit, Google DeepMind, which will be reported under Alphabet's unallocated corporate costs.

Google DeepMind's stated goal is to "solve intelligence, developing more general and capable problem-solving systems, known as artificial general intelligence." Until last year, artificial general intelligence seemed far in the future, and it may still be, but if we have learned anything from the explosion of activity around generative AI, it is the capacity to be astounded by what well-trained computers can do. With DeepMind, Google is putting a stake in the ground for what might be the next AI revolution.

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.

451 Research is part of S&P Global Market Intelligence. For more about 451 Research, please contact.