Research — March 25, 2025

The IT infrastructure that supports workloads and applications is one of the few constants in the technology space, yet it has had to grow considerably more sophisticated with each major technological development (e.g., the web and the cloud). AI and machine learning are no different: they present a variety of new technical challenges in the infrastructure stack, and buyers and suppliers have had to respond to them in order to capitalize on new market opportunities. This document lays out our description of what AI infrastructure is and discusses recent trends we are seeing in the space. It also provides a brief overview of our work-in-progress research plans for the year.

As demand for AI and ML technologies has evolved, so has demand for the infrastructure that underpins them. While AI and ML have been around for almost as long as IT itself, market developments over the last several years have increased demand among consumers and businesses, and that demand has extended to the infrastructure layer as well. In response, a considerable influx of new technologies, offerings and vendors has entered the marketplace across the compute, storage, networking, cloud and edge spaces to try to capitalize on this demand.

How we view AI infrastructure

AI infrastructure is more complex than just a bunch of graphics processing units (GPUs). Generally, it is a collection of hardware and software that is deployed in support of AI and ML applications. AI infrastructure is most often equipped with GPUs and/or custom silicon, and it may be integrated with other accelerators to help process these compute-intensive workloads. AI infrastructure may also include software products used to manage, control, and orchestrate the underlying hardware and software deployed in support of these workloads. Moreover, it encompasses hardware and software products whose dedicated purpose is to accelerate AI and ML workloads. AI infrastructure can be delivered to customers via capital expenditure and operating expense models through traditional purchases and subscriptions, including in the cloud.

Other common features and traits of AI infrastructure include:

– Specific accelerated compute, storage, and networking components to process, store and move data.

– Integration with AI/ML libraries and frameworks (e.g., PyTorch and TensorFlow), data processing frameworks, and programming languages (e.g., Java and Python).

– Integration with database and data management software products.

– Several routes to market, including fully commercial, open-source and commercialized open-source offerings.

– Prepackaged and/or converged offerings from suppliers that typically ship with accelerators.

– Self-management of the infrastructure and/or the decisions around it, which distinguishes it from other high-level software and machine-learning platform offerings that have infrastructure choices made by individual suppliers. In many ways, this can be thought of as analogous to comparisons made between traditional IaaS and PaaS models.

Lay of the land

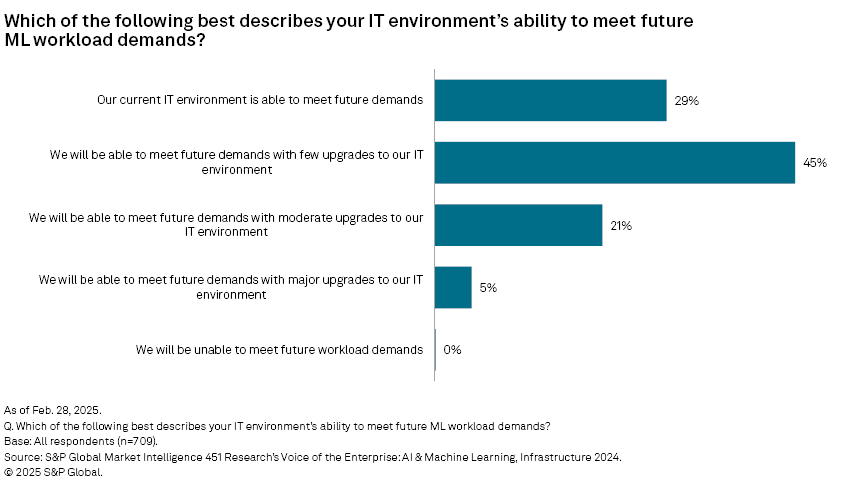

AI/ML market developments have brought a variety of new hardware and software components into the mix to support these workloads. According to our Voice of the Enterprise data, over 70% of respondents reported being inadequately prepared to handle future ML and AI workload demands in terms of the IT infrastructure that they have today.

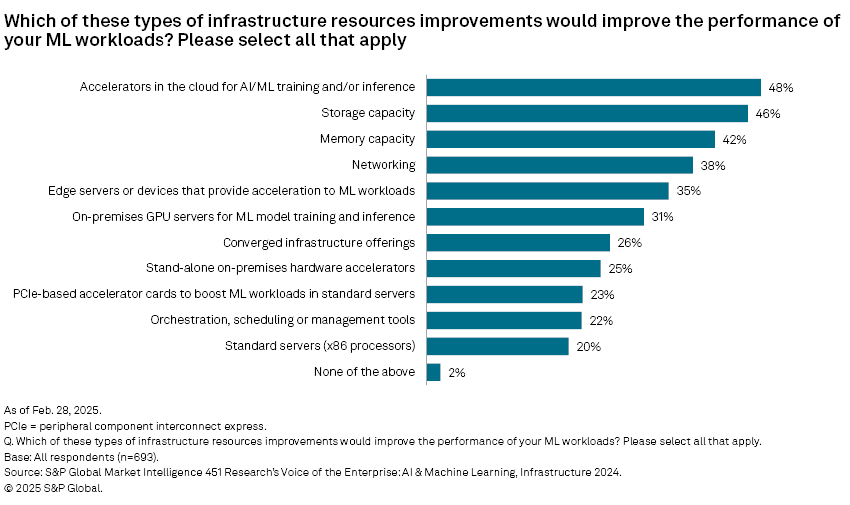

Understandably, many of these performance-related bottlenecks have to do with the availability of, and access to, dedicated hardware such as GPUs and other accelerators in the cloud, but this response alone does not tell the full story. A handful of other infrastructure components were cited nearly as often, including storage capacity (46%), memory capacity (42%), networking (38%), and edge servers and devices to provide acceleration (35%).

Although our Voice of the Enterprise: AI and Machine Learning, Infrastructure 2024 survey concluded in mid-2024, its findings track well with some of the technical developments and demands around AI infrastructure that we are seeing this year. Market momentum toward the next steps of AI implementations, including generative, inferential and agentic workloads, correlates well with the need for extremely low latency and for data to be processed as close to the end user as possible. This likely explains respondents' reported needs for items such as increased memory capacity, networking, and edge servers and devices.

This survey data not only suggests that organizations are willing to use the cloud for their AI/ML workload computing demands, but it also seems to indicate something of a preference for cloud environments over on-premises ones, as evidenced by the ranking between the two choices in the figure above. This trend became even more apparent when respondents were asked to pick the single infrastructure component they felt was "most essential" to improve their ML workload performance, with cloud-based accelerators outpacing on-premises ones by 26% to 10%. Given that organizations initially favored on-premises environments for their AI workloads (and for good reasons, such as data governance, security and performance), this is a somewhat surprising finding, although not an unheard-of one given most companies' cost constraints and where they are in their AI road maps. In these cases, the cloud could be considered a natural on-ramp: it lets companies avoid the larger up-front investments, the difficulty of procuring GPUs and other accelerated infrastructure, and the deployment and implementation challenges that are typically associated with on-premises environments. Keeping in mind the trends we are seeing around declining AI project success rates and increasing executive and stakeholder scrutiny of projects, it stands to reason that this customer preference for deploying AI workloads in the cloud as a way to lessen business-related risks will likely persist throughout 2025.

Near-term research agenda

Our research agenda intends to pick up on the aforementioned trends and representative vendors in the AI infrastructure space, with an emphasis on dynamics and changes in buyer behavior as the year progresses. AI infrastructure is an organizational priority within the broader AI/ML stack, but budget considerations will also be closely monitored, particularly in relation to increased geopolitical uncertainties and AI regulatory environments. The impact of technical developments will also be closely followed, including the potential impact of the availability of low-cost open-source models from DeepSeek on infrastructure and datacenter demand.

Additionally, we intend to refresh our Voice of the Enterprise: AI & Machine Learning Infrastructure survey in the months to come. We also plan to develop AI infrastructure market size and forecast projections as part of our Market Monitor & Forecast products.

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.

S&P Global Market Intelligence 451 Research is a technology research group within S&P Global Market Intelligence. For more about the group, please refer to the 451 Research overview and contact page.
