22 Nov, 2021

U.S. lawmakers' focus on recommender algorithms in a recent pair of bills may do little to address the broader harms caused by social media platforms.

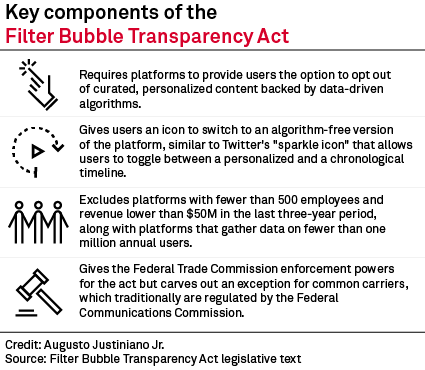

The algorithms have become the center of legislative attention, including a bipartisan, bicameral push to require platforms to let users opt out of personalized content targeting. The push follows leaked documents from Facebook whistleblower Frances Haugen that highlighted how the company's platforms affect teenage girls' mental health, among other concerns, including the spread of hate speech. Recommender algorithms, automated tools that personalize content and make social media more addictive, ultimately determine what users see online.

However, switching off these algorithms could still expose users to troubling material, because they would lose the filtering and protection that machine learning provides. If the Filter Bubble Transparency Act passes, users who opt out could end up spending more time online, because it would take them longer to find the content they are looking for.
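To make the opt-out concrete, here is a minimal sketch of the difference between a personalized feed and an unpersonalized one. It assumes a hypothetical `Post` record and a hypothetical per-user `affinity` map of topic weights; real platforms rank on far more signals, and the bills' actual requirements may differ. The opted-out path here simply sorts posts newest first.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    topic: str
    timestamp: int  # hypothetical: seconds since epoch


def build_feed(posts, affinity, personalized=True):
    """Return posts in display order.

    personalized=True: rank by the user's (hypothetical) topic affinity,
    breaking ties by recency.
    personalized=False: the opt-out path, newest first, no user data used.
    """
    if personalized:
        return sorted(
            posts,
            key=lambda p: (affinity.get(p.topic, 0.0), p.timestamp),
            reverse=True,
        )
    return sorted(posts, key=lambda p: p.timestamp, reverse=True)


posts = [
    Post("a", "sports", 100),
    Post("b", "cooking", 200),
    Post("c", "politics", 300),
]
affinity = {"cooking": 0.9, "sports": 0.4}

print([p.post_id for p in build_feed(posts, affinity)])         # ['b', 'a', 'c']
print([p.post_id for p in build_feed(posts, affinity, False)])  # ['c', 'b', 'a']
```

In the opted-out feed, the cooking post this user favors no longer appears first, which illustrates the trade-off described here: less filtering, and potentially more scrolling to find the content they want.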

Helpful or hurtful

"I don't think this [bill] has much to do with improving the consumer experience of using these apps," said Jessica Melugin, director of the Competitive Enterprise Institute's Center for Technology & Innovation. The institute is a think tank that promotes free-market policies.

Documents leaked by the Facebook whistleblower detailed an experiment showing that switching off the algorithm can actually increase use of the platform. The company turned off its sorting algorithm for 0.5% of users' feeds, which led to users spending more time on the platform and to greater ad revenue for Facebook.
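The leaked documents, as described here, do not say how Facebook selected that 0.5% of users, but experiments of this kind are commonly run by hashing each user ID into a stable bucket. The sketch below illustrates that general technique only; the function name, experiment label and hashing scheme are assumptions, not Facebook's method.

```python
import hashlib


def in_holdout(user_id: str, experiment: str, fraction: float = 0.005) -> bool:
    """Deterministically decide whether a user is in the holdout group.

    Hashing (experiment, user_id) gives each user a stable pseudo-random
    bucket in [0, 1); users whose bucket falls below `fraction` (0.5% by
    default) would see the unranked feed for the whole experiment.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < fraction


# Hypothetical user IDs and experiment label, for illustration only.
users = [f"user{i}" for i in range(100_000)]
held_out = sum(in_holdout(u, "feed-ranking-off") for u in users)
print(held_out)  # expected to land near 500, i.e. about 0.5% of 100,000
```

Hashing rather than random sampling keeps each user in the same group across sessions, so the experiment can compare the two groups' usage over time.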

"That little experiment is a good example of why going in and micromanaging how these platforms present their services is problematic for a bunch of politicians sitting in Washington, D.C.," Melugin said in an interview.

Haugen's revelations have given fresh momentum to bipartisan legislative efforts to curb social media. Reps. Ken Buck, R-Colo., and David Cicilline, D-R.I., are leading the House version of the Filter Bubble Transparency Act. Sens. John Thune, R-S.D., and Richard Blumenthal, D-Conn., have revived a similar bill, first drafted in 2019, after it picked up more co-sponsors. The bills' key backers have experience advancing consensus legislation targeting Big Tech.

"Algorithms can be useful, of course, but many people simply aren't aware of just how much their experience on these platforms is being manipulated and how this manipulation can have negative emotional effects," Thune wrote in a CNN opinion column this month.

Still, even with bipartisan support, the bills must pass both the House and the Senate, where lawmakers are mired in debates over other high-profile issues such as budget reconciliation and the debt ceiling.

First steps

While the bills could be a good first step, structural changes to social media business models are necessary to make a lasting impact on consumer well-being, said Daniel Hanley, a senior policy analyst with the pro-competition research group Open Markets Institute.

"These platforms are designed to maximize engagement at nearly all costs," Hanley said. Social media giants such as Facebook parent Meta Platforms Inc., Snap Inc., Twitter Inc. and ByteDance's TikTok all have the resources to innovate and find new ways to capture more screen time, even if users control the content they see, Hanley said.

Facebook declined to comment on this specific set of bills when contacted by S&P Global Market Intelligence. It has previously said it supports efforts to bring greater transparency to algorithmic systems and to give people more control over their own user experience.

Facebook said Nov. 18 that it is also testing new tools that make it easier for people to find and use News Feed controls, adjust their ranking preferences and customize their feeds, among other changes.

Technical solutions

Giving users the ability to opt out of content targeting could worsen the social media experience, said Mico Yuk, a business intelligence consultant and data storytelling expert. Turning off algorithms may lead to users seeing toxic content because there is less control over what they view, she said. By contrast, an Instagram LLC user who keeps the default content targeting turned on can, for instance, train their Explore page to recommend more positive content.
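How a user "trains" a recommendation page can be sketched with a simple feedback loop: each like or "not interested" tap nudges a per-topic weight up or down. This is a hypothetical illustration of the general idea, assuming an exponential moving average over topic weights; it is not Instagram's actual Explore algorithm, and the names and learning rate are invented for the example.

```python
def update_affinity(affinity, topic, signal, learning_rate=0.1):
    """Nudge a topic weight toward an engagement signal.

    signal: +1.0 for positive feedback (like, long view),
            -1.0 for negative feedback (e.g. "not interested").
    This is an exponential moving average toward the latest signal, so
    weights stay within [-1, 1] as long as the signals do and
    0 < learning_rate <= 1.
    """
    current = affinity.get(topic, 0.0)
    affinity[topic] = current + learning_rate * (signal - current)
    return affinity


affinity = {}
for _ in range(5):  # the user likes five pet posts in a row
    update_affinity(affinity, "pets", +1.0)
update_affinity(affinity, "outrage", -1.0)  # one "not interested" tap

print(round(affinity["pets"], 3))  # 0.41
print(affinity["outrage"])         # -0.1
```

A ranking step could then favor topics with higher weights, so repeated positive feedback gradually shifts what the page recommends.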

Social platforms should also make better use of natural language processing, or NLP, techniques to remove toxic content altogether, Yuk said. NLP is a branch of artificial intelligence, now largely driven by machine learning, that focuses on analyzing words and text. A common NLP technique known as sentiment analysis could be used to measure the sentiment of captions, comments and other text on a platform to help judge how harmful they may be to users.
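A minimal sketch of sentiment analysis, assuming the simplest lexicon-based approach: each word carries a fixed score, and a comment's score is the average of its matched words. The tiny `LEXICON`, the threshold and the function names are hypothetical; production systems typically rely on trained models, and negative sentiment is not the same thing as harm, so this sketch only flags comments for review rather than removing them.

```python
import re

# Hypothetical, tiny sentiment lexicon for illustration only.
LEXICON = {
    "love": 1.0, "great": 1.0, "happy": 1.0, "beautiful": 1.0,
    "hate": -1.0, "ugly": -1.0, "stupid": -1.0, "disgusting": -1.0,
}


def sentiment_score(text: str) -> float:
    """Average lexicon score of the words in `text` (0.0 if none match)."""
    words = re.findall(r"[a-z']+", text.lower())
    scores = [LEXICON[w] for w in words if w in LEXICON]
    return sum(scores) / len(scores) if scores else 0.0


def flag_for_review(comments, threshold=-0.5):
    """Return comments whose score is at or below `threshold`."""
    return [c for c in comments if sentiment_score(c) <= threshold]


comments = [
    "What a beautiful photo, love it!",
    "You look ugly and stupid.",
    "Posted from my phone.",
]
print(flag_for_review(comments))  # ['You look ugly and stupid.']
```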

For Yuk, a personalized feed is preferable because it gives users more control over what they consider "happier" content than an algorithm-free feed does.

Financial impact

The success of algorithms in driving social media use is evident in operators' usage numbers and sales. Facebook's third-quarter ad revenue rose 33% to $28.28 billion, and daily active users averaged 1.93 billion in September 2021, up 6% from a year earlier.
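Working the reported growth rates backward gives the approximate year-earlier baselines. The short sketch below divides each current figure by one plus its growth rate; because the reported percentages are rounded, the results are estimates rather than reported figures, and the helper name is illustrative.

```python
def prior_period_value(current: float, pct_change: float) -> float:
    """Back out the prior-period value from a current value and its % growth."""
    return current / (1 + pct_change / 100)


# Figures as reported above; growth rates are rounded, so the implied
# year-earlier values are approximate.
ad_revenue_q3_2021 = 28.28  # US$ billions
dau_sept_2021 = 1.93        # billions of daily active users

print(round(prior_period_value(ad_revenue_q3_2021, 33), 2))  # 21.26
print(round(prior_period_value(dau_sept_2021, 6), 2))        # 1.82
```

In other words, quarterly ad revenue grew by roughly $7 billion year over year, while daily users grew by roughly 110 million.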

Facebook shares were still up 27% year to date as of Nov. 22, even after a sell-off in the wake of the whistleblower revelations. That may suggest investors are not yet worried about the legislation and are waiting to see what its actual effect will be. A verdict may take a while: Apple Inc.'s February changes to App Tracking Transparency did not meaningfully affect shares of ad-supported platforms such as Facebook and Snap until eight months later.

"Investors probably aren't going to get concerned until they see the bills not just signed, but also in practice," said Gene Munster, managing partner at venture capital firm Loup Ventures.