Jun 07, 2018

To D or not to D – is Annex D really a new FRTB requirement?

The long-awaited Basel Committee Consultative Document on the Fundamental Review of the Trading Book (FRTB) minimum capital requirements for market risk was finally published in March this year. Its publication brought clarity to some of the open issues that the industry has been grappling with, but also introduced new concepts that firms must now decipher.

One of these new concepts is the "Guidance for evaluating the sufficiency and accuracy of risk factors for IMA trading desk models" set out in Annex D:

"…Banks must not rely solely on the number of observations to determine whether a risk factor is modellable. The accuracy of the source of the risk factor price must also be considered. In addition to the requirements specified in paragraph 183 (c), the following principles for data used in the model must be applied to determine whether a risk factor that passed the risk factor eligibility test can be modelled using the expected shortfall model or should be subject to a non-modellable risk factor (NMRF) charge."

This statement raises two questions. Is Annex D a new layer on top of the Risk Factor Eligibility Test (RFET), as the text above might suggest, particularly the statement that this is "In addition to the requirements specified in paragraph 183 (c)…"? Or is it merely a reiteration of principles that all banks should already have in place?

Banks have given varied responses to the above questions. Some institutions read Annex D as a brand new principle that needs to be addressed, while others believe it is already adequately covered by existing risk and control governance. So, which is it?

While it's unlikely that the paper intends to add unnecessary complexity to an already complex process, it nevertheless requires banks to ensure that they have proper procedures in place. In essence, it is a reiteration of overarching data governance principles that banks should already have in place, such as BCBS239 "Principles for effective risk data aggregation and risk reporting" from 2013 or even BCBS153 "Supervisory guidance for assessing banks' financial instrument fair value practices" from 2009. Rather than a brand new principle, it's likely that banks will be expected to show more robust processes in using and connecting all available data and systems for risk management processes.

As Annex D states, the NMRF process is more than just a simple "24 real price observations (RPOs) and one-month gap count"; it also requires banks to consider the accuracy of the risk factors produced. Consistency, accuracy, frequency, correlation and adequate approximation have been part of the Basel principles for several years now. Annex D outlines similar principles that should be followed to determine the accuracy of these risk factors. The principles focus on testing the accuracy of the data input into the model, with some emphasis placed on model validation.
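The count-and-gap part of the test mentioned above can be sketched as follows. This is a minimal illustration, not the regulatory definition: the function name `passes_count_and_gap`, the 365-day look-back window and the 31-day reading of "one month" are assumptions made for the example.

```python
from datetime import date, timedelta


def passes_count_and_gap(observation_dates, as_of,
                         min_obs=24, max_gap_days=31, window_days=365):
    """Simple count-and-gap check on real price observation (RPO) dates.

    Assumptions (hypothetical, for illustration only):
    - the look-back window is the `window_days` days ending on `as_of`;
    - "one month" is approximated by `max_gap_days` calendar days;
    - only gaps between consecutive observations are checked.
    """
    window_start = as_of - timedelta(days=window_days)
    # Keep distinct observation dates that fall inside the window.
    in_window = sorted({d for d in observation_dates
                        if window_start < d <= as_of})
    if len(in_window) < min_obs:
        return False
    # Largest allowed distance between consecutive observations.
    gaps = [(later - earlier).days
            for earlier, later in zip(in_window, in_window[1:])]
    return all(gap <= max_gap_days for gap in gaps)


as_of = date(2018, 6, 1)
weekly = [as_of - timedelta(days=7 * i) for i in range(52)]
print(passes_count_and_gap(weekly, as_of))
```

As the article argues, passing a check like this says nothing about whether the underlying prices are accurate; it only counts observations.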

Principle 3 states that any data source used should be "representative of RPOs", that "price volatility is not understated" and that "correlations are reasonable approximations of correlations among RPOs". Principle 4 goes on to state that where the data is not an RPO, "the bank must demonstrate that the data used are reasonably representative of RPOs". This principle also notes that the back office already tests front office prices through Independent Price Verification (IPV). Risk prices should also be part of this process. Principle 5 outlines the importance of updating the data with sufficient frequency: "update data at a minimum on a monthly basis, but preferably daily". Principle 6 emphasizes the need for banks to use historical time series that reflect stress periods for their ES calculations.
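One possible reading of the Principle 3 wording on volatility and correlation is sketched below for a pair of risk factors. Everything here is an assumption made for illustration: the function names, the use of return series as inputs, and the thresholds `min_vol_ratio` and `max_corr_gap` are hypothetical and do not come from Annex D.

```python
from statistics import mean, stdev


def pearson(xs, ys):
    """Pearson correlation of two equal-length series."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def principle3_flags(source_a, source_b, rpo_a, rpo_b,
                     min_vol_ratio=0.9, max_corr_gap=0.2):
    """Compare a bank's data source with RPO-based returns.

    Thresholds are hypothetical; a bank would set and justify its own.
    """
    # Ratio below 1 means the data source is less volatile than the RPOs.
    vol_ratio_a = stdev(source_a) / stdev(rpo_a)
    vol_ratio_b = stdev(source_b) / stdev(rpo_b)
    # Difference between correlation in the source and among the RPOs.
    corr_gap = abs(pearson(source_a, source_b) - pearson(rpo_a, rpo_b))
    return {
        "vol_ratio_a": vol_ratio_a,
        "vol_ratio_b": vol_ratio_b,
        "volatility_understated": min(vol_ratio_a, vol_ratio_b) < min_vol_ratio,
        "corr_gap": corr_gap,
        "correlation_reasonable": corr_gap <= max_corr_gap,
    }


rpo_a = [0.010, -0.020, 0.015, -0.005, 0.020]
rpo_b = [0.008, -0.015, 0.010, 0.002, 0.018]
source_a = [0.5 * r for r in rpo_a]  # deliberately damped series
source_b = list(rpo_b)
print(principle3_flags(source_a, source_b, rpo_a, rpo_b))
```

In this toy input the damped series keeps the same correlation as the RPOs but halves the volatility, so only the volatility flag is raised.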

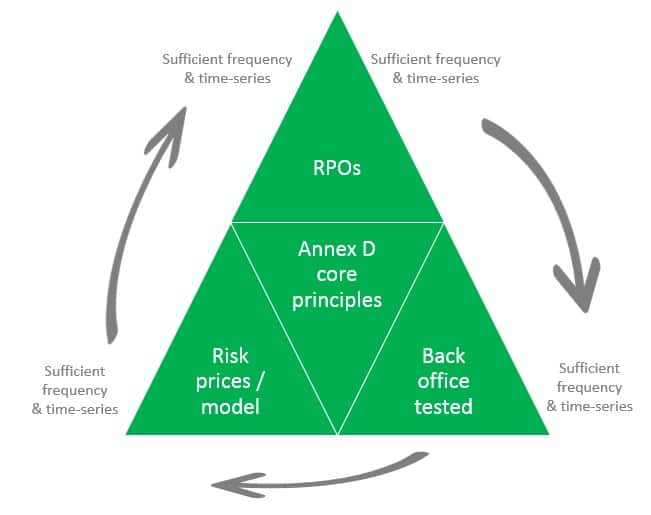

Another way to read these principles is to view the data coming from the different functions of the trading ecosystem (RPOs, consensus prices, etc.) as three corners of a triangle, which must co-exist (albeit not be identical). See figure 1 below.

From these guidelines, it is clear that the expectation is for risk prices to be tested to RPOs. The models used to generate the risks should also be calibrated to market prices and this exercise should run at a monthly frequency, although daily is recommended. In turn, IPV should be a factor in considering whether data used to generate risks is accurate. Generating risks on out-of-line prices will create out-of-line risks as second order impact of risks is not accounted for.

Figure 1: Annex D Core Principles

However, without closing the triangle by testing consensus/back-office prices against RPOs, it will be very difficult to determine whether large price differences arising from the Principle 3 and Principle 4 tests come from risk prices/models being out-of-line or from a general increase in uncertainty in the market.

By closing the triangle, a clear picture emerges that allows out-of-line pricing to be distinguished from price uncertainty. This price uncertainty may already be captured within other calculations (e.g. Pru-Val), so accounting for it here would create duplication. Large differences between a bank's own risk prices and RPOs cannot be explained solely by large differences between risk prices and consensus/back-office prices (IPV). If testing consensus/back-office prices against RPOs also reveals large differences, alongside existing large IPV differences and large differences between risk prices and RPOs, this can be an indication of uncertainty in the market.
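The triangle logic described above can be sketched as a small decision rule. This is only an illustration of the reasoning: the function name `classify_triangle`, the use of relative price differences, the single tolerance `tol` and the returned labels are all hypothetical choices, not part of Annex D.

```python
def classify_triangle(risk_price, consensus_price, rpo, tol=0.01):
    """Compare the three corners of the triangle pairwise.

    `tol` is a hypothetical relative tolerance for a "large" difference.
    """
    def rel_diff(a, b):
        return abs(a - b) / abs(b)

    risk_vs_rpo = rel_diff(risk_price, rpo)
    consensus_vs_rpo = rel_diff(consensus_price, rpo)
    risk_vs_consensus = rel_diff(risk_price, consensus_price)  # IPV leg

    if risk_vs_rpo <= tol:
        return "in line"
    if consensus_vs_rpo <= tol:
        # Consensus agrees with the RPO, so the risk price is the outlier.
        return "risk price out of line"
    if risk_vs_consensus > tol:
        # All three legs show large differences.
        return "possible market uncertainty"
    return "inconclusive"


print(classify_triangle(100.2, 100.1, 100.0))
print(classify_triangle(105.0, 100.1, 100.0))
print(classify_triangle(105.0, 96.0, 100.0))
```

The last branch covers the case where risk and consensus prices agree with each other but not with the RPO, which the article does not discuss; it is labelled inconclusive here rather than guessed at.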

In conclusion, we believe that the principles outlined in Annex D represent best market practice for data management, rather than a brand new concept. While some banks are working under the assumption that this is a new concept and are advocating the removal of Annex D from the final FRTB text due in December, they should be ready to embrace these principles, if they have not done so already, regardless of whether they are explicitly mentioned in the final text. After all, these principles will considerably improve data governance and reduce risk, which is good for individual institutions as well as for the industry as a whole.


This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.
