Research — 1 Feb, 2024

By Thomas Mason

Generative artificial intelligence captivated the public in 2023, with Google Trends showing an explosion of searches for the term "ChatGPT." This was a huge leap forward for AI, but we think advances in explainable AI are just as important, if not more so, for the insurance industry. Explainable AI refers to techniques that help show, in ways humans can understand, how opaque models make predictions and decisions.

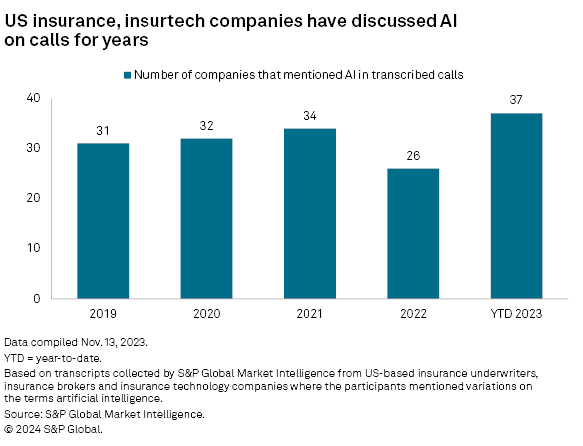

Insurance companies have been talking about AI for years, even before ChatGPT's release in November 2022, according to our review of transcripts from publicly traded, US-based insurance underwriters, insurance brokers and insurance technology companies. More than 30 companies discussed the topic in each of the three years before 2022.

Insurtech companies should fully embrace AI and promote those efforts to investors. At the same time, the financial services industry needs advancements in explainable AI. Those will be key to alleviating regulatory concerns and should be even more useful to the industry than generative AI.

Improving customer experience through AI was a popular goal among the insurance companies we studied. As with insurance distribution itself, there are different approaches. Lemonade Inc. fields customer questions directly, with a chatbot named Maya that has answered questions in its mobile and web apps for years. In November 2023, Lemonade said it rolled out a generative AI-powered system that handles customer e-mails as well.

Conversely, some companies use AI to empower agents. Reliance Global Group Inc. said it has an AI-enabled customer relationship management system that provides "smart coaching" to agents in areas such as customer engagement and sales. On the sales and marketing front, AI is often used to segment markets and recommend products and services to customers.

Insurance companies have a multitude of AI applications for back-office operations as well. Two of the most common themes in the transcripts we analyzed were risk assessment and claims handling. The Travelers Cos. Inc. mentioned both in a July 2023 earnings call, saying it uses AI to assess roof conditions, a driver of homeowners insurance losses, and to ingest legal complaints for claims. Those were just two of several examples the company discussed.

By sector, half of the companies in our transcript analysis were property and casualty insurers (including title and mortgage guaranty companies). Managed care companies made up 27%, life and health insurers accounted for 16% and the remainder (roughly 7%) were multiline.

Surveys by the National Association of Insurance Commissioners (NAIC) found significantly less use of AI and machine learning among life insurers than among auto and home insurers. Based on slides from the regulator's Fall 2023 National Meeting, only 58% of life insurers surveyed used, planned to use or were exploring the use of AI and machine learning algorithms, compared with 88% of auto insurers and 70% of home insurers.

One of the keys to the future of AI in insurance is regulation. While the use cases above probably will not draw much scrutiny, we think state regulators will be much more wary of AI's use in policy pricing. Regulators demand detailed explanations of how actuaries arrived at their decisions, and such explanations can be very difficult, if not impossible, to produce for certain cutting-edge AI models.

Neural networks, which form the foundation of the transformer technology that ChatGPT uses, are a classic example of a model where the inner workings can be opaque. They can produce extremely accurate results, but sometimes the relationships they find between data points are so complex that a human cannot determine how they arrived at their predictions.

For instance, Radian Group Inc. spoke to the challenges of AI model interpretability during a June 2023 call. While the company uses neural networks to analyze mortgage insurance pricing, it also digs into the model to derive equations that can explain the model's output.
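The article does not say how Radian derives its equations. One generic technique with the same goal is a global surrogate: fit a simple, readable equation (here, ordinary least squares with an intercept and linear terms) to the neural network's own predictions, then report how faithfully that equation reproduces the network. The sketch below is illustrative only; the network weights, the two scaled inputs `x1` and `x2`, and the helper names `neural_net`, `solve` and `fit_linear_surrogate` are hypothetical, and pure Python is used so it runs without third-party libraries.

```python
import math
import random

# Hypothetical weights for a tiny one-hidden-layer network; stands in for a trained pricing model.
W_HIDDEN = [[1.5, -0.8], [-0.6, 1.2], [0.9, 0.4]]
B_HIDDEN = [0.1, -0.2, 0.05]
W_OUT = [0.7, -0.5, 0.9]
B_OUT = 0.3


def neural_net(x):
    """Forward pass: tanh hidden layer, linear output. Inputs are two scaled features."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(W_HIDDEN, B_HIDDEN)]
    return sum(w * h for w, h in zip(W_OUT, hidden)) + B_OUT


def solve(a, b):
    """Solve a small square linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    coef = [0.0] * n
    for r in range(n - 1, -1, -1):
        coef[r] = (m[r][n] - sum(m[r][c] * coef[c] for c in range(r + 1, n))) / m[r][r]
    return coef


def fit_linear_surrogate(model, samples):
    """Least-squares fit of intercept + linear terms to the model's own outputs.

    Returns (coefficients, R^2), where R^2 measures fidelity to the model,
    not predictive accuracy on real outcomes."""
    design = [[1.0] + list(x) for x in samples]
    y = [model(x) for x in samples]
    k = len(design[0])
    # Normal equations: (X^T X) c = X^T y
    xtx = [[sum(row[i] * row[j] for row in design) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(design, y)) for i in range(k)]
    coef = solve(xtx, xty)
    preds = [sum(c * v for c, v in zip(coef, row)) for row in design]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - p) ** 2 for yi, p in zip(y, preds))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return coef, 1.0 - ss_res / ss_tot


rng = random.Random(42)
samples = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(1000)]
coef, r2 = fit_linear_surrogate(neural_net, samples)
print(f"output ~= {coef[0]:.3f} + {coef[1]:.3f}*x1 + {coef[2]:.3f}*x2  (R^2 = {r2:.3f})")
```

A high R² suggests the equation is a fair summary of the network over the sampled range; a low one signals nonlinear structure that a single linear equation misses. Richer surrogates (interaction terms, shallow decision trees) trade some readability for fidelity.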

Regulators could become more comfortable with neural networks. They have already allowed some use of them in risk assessment, approving product filings that mention third-party vendors using neural networks to model catastrophes and roof conditions. Under Actuarial Standards of Practice 38 and 56, insurers can use models outside their area of expertise if they can explain a series of model characteristics, including how the model is constructed, its potential risks, and the inputs and assumptions used.

But we think a more likely outcome is advancements in explainable AI. We have already seen some of these techniques working their way into product filings: Shapley values, permutation importance and partial dependence plots, to name a few. Generative AI might be part of that effort if it can explain, in human language, how complex statistical models form conclusions. At the very least, we think generative AI applications should cite the sources of their information.
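To make the three named techniques concrete, here is a minimal pure-Python sketch that applies each one to a toy homeowners pricing function treated as a black box. The feature names, coefficients, synthetic data and helper functions are all hypothetical. Shapley values are computed exactly against a single baseline row (a common simplification, feasible only for a handful of features), permutation importance measures how much prediction error rises when one feature column is shuffled, and partial dependence averages predictions with one feature pinned to each grid value.

```python
import itertools
import math
import random
import statistics

# Hypothetical features of a toy homeowners pricing model (illustrative only).
FEATURES = ["roof_age", "prior_claims", "coverage_k"]


def black_box_premium(row):
    """Stand-in for an opaque model: the explainers below only query it."""
    roof_age, prior_claims, coverage_k = row
    # Interaction term: prior claims cost more on older roofs.
    return (500.0 + 12.0 * roof_age
            + 150.0 * prior_claims * (1 + roof_age / 20.0)
            + 2.0 * coverage_k)


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction; 'absent' features take baseline values."""
    n = len(x)

    def value(present):
        row = [x[k] if k in present else baseline[k] for k in range(n)]
        return model(row)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for coalition in itertools.combinations(others, size):
                s = set(coalition)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def mse(model, rows, targets):
    return statistics.fmean((model(r) - t) ** 2 for r, t in zip(rows, targets))


def with_value(row, feature, v):
    """Copy of `row` with one feature replaced."""
    return row[:feature] + [v] + row[feature + 1:]


def permutation_importance(model, rows, targets, feature, rng):
    """Rise in error after shuffling one column, which breaks its link to the target."""
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    shuffled = [with_value(r, feature, v) for r, v in zip(rows, column)]
    return mse(model, shuffled, targets) - mse(model, rows, targets)


def partial_dependence(model, rows, feature, grid):
    """Average prediction with `feature` pinned to each grid value for every row."""
    return [statistics.fmean(model(with_value(r, feature, v)) for r in rows) for v in grid]


rng = random.Random(0)
rows = [[rng.uniform(0, 30), rng.randint(0, 3), rng.uniform(100, 500)] for _ in range(500)]
# Hypothetical observed outcomes: the model's output plus noise.
targets = [black_box_premium(r) + rng.gauss(0, 50) for r in rows]

policy = [25.0, 2, 300.0]
baseline = [statistics.fmean(r[k] for r in rows) for k in range(len(FEATURES))]
phi = shapley_values(black_box_premium, policy, baseline)
importance = [permutation_importance(black_box_premium, rows, targets, k, rng)
              for k in range(len(FEATURES))]
pd_roof = partial_dependence(black_box_premium, rows, 0, [0, 10, 20, 30])

for name, p, imp in zip(FEATURES, phi, importance):
    print(f"{name:>12}: shapley={p:8.1f}  permutation_importance={imp:10.1f}")
print("partial dependence on roof_age:", [round(v, 1) for v in pd_roof])
```

By construction, the Shapley values for a policy sum to the gap between its premium and the baseline premium, which is what makes them attractive for itemizing a single pricing decision. In practice, scikit-learn's `sklearn.inspection` module provides `permutation_importance` and `partial_dependence`, and dedicated libraries approximate Shapley values for models with many features.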

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.