Research — 10 Apr, 2024

The stars of the generative artificial intelligence world so far, we would argue, are the foundation model providers. They are the ones that first wowed us with what GenAI could do, got the attention of the media and politicians alike, and then — in the case of OpenAI — descended into corporate chaos worthy of its own docudrama, which we assume is already in production.

While the revenue generated by many of these foundation model companies in less than two years has been incredible, it is prudent to ask whether they will be the ultimate winners in this space, or just a passing fad on the way to eventual dominance by hyperscale cloud vendors and chipmakers.

We doubt that foundation models alone are a sustainable business because the cost of training and maintaining the models is too high. While the price per token via APIs will gradually decrease, the growth in demand volume likely will not be sufficient to offset the reduction in price per token. The availability of viable open-source models as alternatives will also put pressure on margins. Plus, enterprises want more than just a model trained on large chunks of the public web: they want models tailored to their business needs, and they also want applications that can provide direct business benefit. The models are the engine, but enterprises want to buy a car.

Model businesses

There are myriad companies participating in the overall GenAI market: S&P Global Market Intelligence 451 Research's most recent Generative AI Market Monitor included 327 companies, and a forthcoming update has more than 400. But there are relatively few companies solely focused on building foundation models.

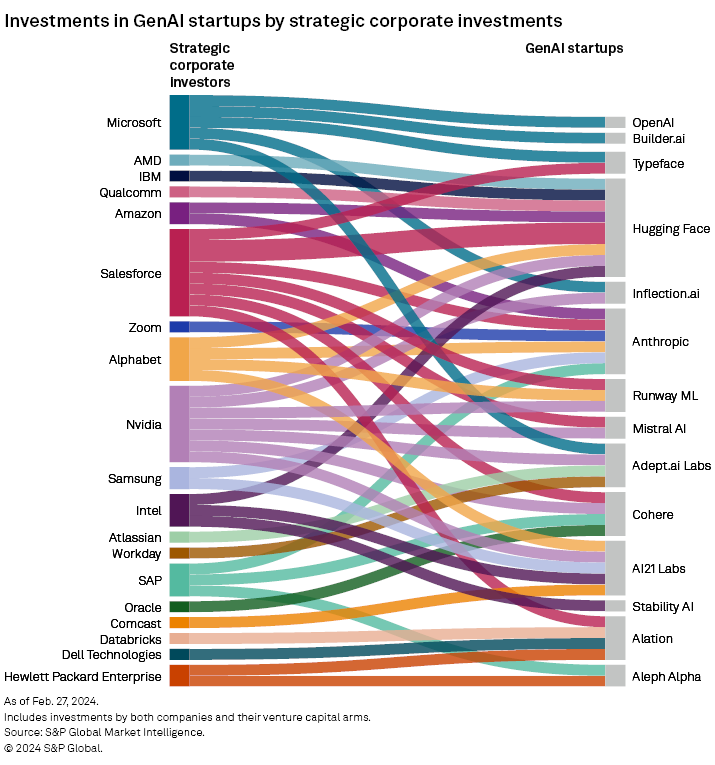

Looking at nine of the top pure-play foundation model companies from our forecast — AI21 Labs, Aleph Alpha, Anthropic, Cohere AI, Hugging Face, Inflection AI, Mistral AI, OpenAI LLC and Stability AI — there has been no shortage of strategic and other investors that want a piece of them. Together, those nine companies have raised $28.67 billion in disclosed rounds, according to S&P Global Market Intelligence. Rounds to come could more than triple or quadruple that number.

The market at the moment includes these pure-play (or close to it) companies plus the hyperscale cloud vendors, large technology vendors such as International Business Machines Corp., and social media firms like Meta Platforms Inc. (which open-sources its models). Then there are hundreds of specialists with tools to generate text, images, audio, video, code, synthetic structured data and other data types.

A lot of money is changing hands between investors and vendors, and between software companies and infrastructure vendors. We recently looked in detail at the role of strategic investors in GenAI specialists. There are good reasons that they want to be part of the action and are not leaving it to traditional investors.

First, strategic investors anticipate an exit via IPO or acquisition. Even if the startup foundation model companies fail, if you are a cloud vendor and the startup is a customer, at least you are generating revenue from them while they are around. Second, tech M&A is coming under increasing scrutiny from antitrust regulators in the US and Europe. The strategics are less confident they can acquire these companies outright, so a few tens of millions of dollars is worth risking to stay part of what could be a heady ride.

The money that foundation model companies are accepting is expensive. Startups usually have lower valuations when they first look to raise money because many are pre-revenue, and thus they have to offer larger chunks of their business to raise the required capital. So where is the money going? A lot is going to the major hyperscale cloud vendors: Amazon.com Inc.'s AWS, Alphabet Inc.'s Google and Microsoft Corp., which spent as much on capital expenditures in its second quarter of fiscal 2024, ended Dec. 31, 2023, as it did in its entire fiscal year 2018.

Capital is also going to NVIDIA Corp., whose incredible results for the fiscal fourth quarter of 2024, announced Feb. 21, drove a $277 billion increase in its market capitalization the following day, more than the value of The Coca-Cola Co. at the time. These investments have mainly gone toward building out infrastructure for training models, but as models become more performant with every release from the major vendors (and probably cost more to train each time), it is worth contemplating what else is required to build an effective GenAI-driven application.

Foundations are one thing; applications are another

Although the fascination with ever-improving models is understandable, the models themselves are not what will ultimately determine success in GenAI. That is because the model is not the application: many other elements need to be wrapped around a model to enable it to solve complex business problems. We have seen the rise of retrieval augmented generation (RAG) as one way of supplementing what a foundation model can bring without having to fine-tune the model. RAG often leverages vector databases that store, index and process high-dimensional vectors (the numerical representations of unstructured data such as text, images, audio and video).
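To make the retrieval step concrete, here is a minimal sketch of the RAG pattern in Python. It is an illustration under loud assumptions, not a description of any vendor's product: the bag-of-words `embed` function stands in for a real embedding model, `ToyVectorStore` stands in for a vector database, and every name and sample document is hypothetical.

```python
import math
import re
from collections import Counter

# Assumption: a tiny stopword list so common words do not dominate similarity.
STOPWORDS = {"a", "an", "the", "is", "are", "to", "on", "of", "what", "our"}

def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(t for t in tokens if t not in STOPWORDS)

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class ToyVectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, doc: str) -> None:
        self._items.append((doc, embed(doc)))

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored documents most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]

def build_prompt(question: str, store: ToyVectorStore, k: int = 2) -> str:
    """Prepend retrieved context to the question; the model itself is unchanged."""
    context = "\n".join(f"- {doc}" for doc in store.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

store = ToyVectorStore()
store.add("Our refund policy allows returns within 30 days.")
store.add("The office is closed on public holidays.")
store.add("A refund is issued to the original payment method.")

prompt = build_prompt("What is the refund policy?", store, k=2)
print(prompt)
```

The prompt that reaches the model contains only the two refund-related documents, which is how retrieval supplements the model without fine-tuning it.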

However, that search lookup process needs to perform well so as not to slow everything down and ruin the user experience. There are already proponents of so-called RAG 2.0 who advocate better precision and recall to take the technique further still. Then there are chaining methods, whereby multiple prompts are connected to generate complex content by breaking down a large generative task into smaller, more manageable parts. The output of one prompt is used as the input for the subsequent prompt, and the sequence repeats until the desired content is generated.
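The chaining pattern described above can be sketched in a few lines of Python. This is a minimal illustration, not any particular framework's API: `run_chain`, `fake_model` and the prompt templates are all hypothetical, and `fake_model` merely wraps its prompt in angle brackets so the data flow is visible without calling a real model.

```python
from typing import Callable, List

def run_chain(model: Callable[[str], str], templates: List[str], initial_input: str) -> str:
    """Run prompts in order; each step's output fills the next template's {input} slot."""
    text = initial_input
    for template in templates:
        prompt = template.format(input=text)
        text = model(prompt)
    return text

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a GenAI model call; it only wraps the prompt."""
    return f"<{prompt}>"

# Assumption: a two-step task, outline first and then draft from the outline.
templates = [
    "Outline: {input}",
    "Draft from: {input}",
]

result = run_chain(fake_model, templates, "GenAI market")
print(result)
```

In a real application the stand-in would be replaced by a call to a hosted or local model, and each template would carry the instructions for one manageable part of the larger task.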

Tuning the retriever for a single model is manageable, but complications can arise when a retrieval engine is working across many different models and different modalities (text, image, audio). Different models and modalities put different pressures on infrastructure. While this report is not focused on the underlying infrastructure of chips, networking, storage or memory, that infrastructure is a factor in this middle layer of the GenAI stack.

The field of machine learning operations (MLOps) is expanding into what we are calling generative AI operations (GenAIOps). The term large language model operations (LLMOps) is too narrow for this developing field, given that it applies to only a single modality of models, namely language. The increased complexities that GenAIOps must address include the novel security risks posed by GenAI. Here, you cannot control the inputs and you have less control over the outputs than with traditional narrow predictive models. There are also the broader data-related challenges posed by the use of RAG.

So beyond the hysteria whipped up by the race to have ever larger and more performant models, which attract not only headlines but also huge amounts of investment, there are plenty of other factors to consider before those expensively assembled models can deliver real business value.

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.

451 Research is a technology research group within S&P Global Market Intelligence. For more about the group, please refer to the 451 Research overview and contact page.