Oct 29, 2018

FRTB Decision-Making: The Data Quality Paradigm

The idiomatic phrase "Garbage In, Garbage Out" still rings true 60 years after it was first coined. It has since spread beyond computer science to decision-making in general, where it describes the poor decisions that follow from inaccurate, incomplete or inconsistent data. The concept goes right to the heart of the main challenges associated with the upcoming Fundamental Review of the Trading Book (FRTB) requirements.

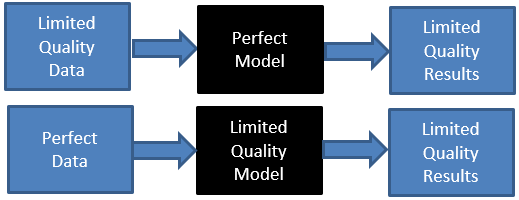

When we talk about "data quality" in the context of decision-making, is it just about having access to good quality data sources, or is it also about how the data is processed? As Ronald Coase put it: "If you torture the data long enough, it will confess." In other words, bad data run through the "proper" model may produce an answer, but not necessarily a reliable one, or indeed the one expected.

Another way to look at this "data quality paradigm" is as follows:

Bill Coen, Secretary General of the Basel Committee on Banking Supervision (BCBS), recognized the data quality issue in the context of FRTB during the ISDA conference in April 2018 when he said:

"… The Committee has conducted many quantitative exercises on market risk, both before and after the publication of the market risk framework, with a QIS exercise currently under way. While the quality of data submitted by banks has improved over time, data quality concerns remain. Thus, a significant proportion of banks' data has been excluded from the Committee's analysis. … as a result, the Committee has in some areas been left with a small sample of observations to finalise certain outstanding revisions. …"

When Coen (and others) talk about data in the FRTB context, and more specifically in the context of non-modellable risk factors (NMRFs), they most likely mean Real Price Observation (RPO) data. However, RPOs alone do not portray the full picture. Without the relevant reference data and market data, the data set is incomplete. Enriching the transactional data is therefore vital: it enables better classification and greater accuracy, which in turn makes modellability test results meaningful.

And what did Coen mean by quality concerns? Data quality has multiple dimensions: accuracy, completeness, consistency, uniqueness, timeliness, staleness and conformance. The BCBS most likely encountered a myriad of issues touching on most of these dimensions, reinforcing the point that data quality is never one-dimensional.
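To make some of these dimensions more concrete, here is a minimal Python sketch that scores a batch of observations on completeness, uniqueness, timeliness and conformance, and reports staleness per risk factor. The `Observation` record, its field names, the one-day timeliness threshold and the currency-format check are hypothetical choices for illustration only. Accuracy and consistency are deliberately left out, because measuring them requires comparison against an independent reference source.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical RPO record; the field names are assumptions for illustration.
@dataclass
class Observation:
    trade_id: str
    risk_factor: Optional[str]
    price: Optional[float]
    trade_date: date
    reported_date: date
    currency: Optional[str]

REQUIRED_FIELDS = ("risk_factor", "price", "currency")

def completeness(obs: list[Observation]) -> float:
    """Share of records with every required field populated."""
    if not obs:
        return 0.0
    full = sum(all(getattr(o, f) is not None for f in REQUIRED_FIELDS) for o in obs)
    return full / len(obs)

def uniqueness(obs: list[Observation]) -> float:
    """Distinct trade IDs divided by total records (1.0 means no duplicates)."""
    return len({o.trade_id for o in obs}) / len(obs) if obs else 0.0

def timeliness(obs: list[Observation], max_lag_days: int = 1) -> float:
    """Share of records reported within max_lag_days of the trade (assumed threshold)."""
    if not obs:
        return 0.0
    on_time = sum((o.reported_date - o.trade_date).days <= max_lag_days for o in obs)
    return on_time / len(obs)

def conformance(obs: list[Observation]) -> float:
    """Share of records whose currency looks like an ISO 4217 code (three upper-case letters)."""
    if not obs:
        return 0.0
    ok = sum(
        o.currency is not None and len(o.currency) == 3
        and o.currency.isalpha() and o.currency.isupper()
        for o in obs
    )
    return ok / len(obs)

def staleness_days(obs: list[Observation], as_of: date) -> dict[str, int]:
    """Days since the latest observation for each (non-missing) risk factor."""
    latest: dict[str, date] = {}
    for o in obs:
        if o.risk_factor is not None and o.trade_date > latest.get(o.risk_factor, date.min):
            latest[o.risk_factor] = o.trade_date
    return {rf: (as_of - d).days for rf, d in latest.items()}

# Illustrative sample: one clean record, one late duplicate, one incomplete record.
sample = [
    Observation("T1", "EUR-IRS-10Y", 0.91, date(2018, 10, 1), date(2018, 10, 1), "EUR"),
    Observation("T1", "EUR-IRS-10Y", 0.91, date(2018, 10, 1), date(2018, 10, 3), "EUR"),  # duplicate, reported late
    Observation("T2", None, 101.2, date(2018, 9, 3), date(2018, 9, 6), "usd"),  # missing factor, non-conforming code
]
print(completeness(sample), uniqueness(sample), timeliness(sample), conformance(sample))
print(staleness_days(sample, as_of=date(2018, 10, 29)))
```

Keeping each dimension as a separate score, rather than one blended number, makes it easier to see which kind of problem caused a record to be excluded.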

It is therefore not surprising that the BCBS had to exclude "a significant proportion of banks' data" from its quantitative exercises on market risk. Clearing data illustrates just one element of the data reporting conundrum: it is no secret that there is no standard for reporting clearing data. This has a knock-on effect on downstream reporting and probably explains why the BCBS struggled to make sense of the data the banks submitted. It is one manifestation of the data aggregation and reporting weaknesses in the banking system that regulators identified in the post-crisis era.

For use cases that require higher-level reporting or trend analysis, clearing and trade repository data can be of great value. However, demonstrating compliance with the strict FRTB modellability criteria (the 24-RPO count and maximum one-month gap between observations, or whatever the final text stipulates), and providing the evidence supervisors will clearly require, cannot rest on subjective assumptions: both the input data and the data models must be robust and traceable.
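A minimal sketch of such a test for a single risk factor, assuming the criteria quoted above: at least 24 RPOs over the past year and no more than one month between consecutive observations. The thresholds are parameters because the final text may differ. Treating "one month" as 31 days, using a 365-day look-back window and counting at most one observation per calendar day are assumptions of this sketch, not rules taken from the text.

```python
from datetime import date, timedelta
from typing import Iterable

def is_modellable(
    obs_dates: Iterable[date],
    as_of: date,
    min_count: int = 24,
    max_gap: timedelta = timedelta(days=31),   # "one month" approximated as 31 days (assumption)
    window: timedelta = timedelta(days=365),   # one-year look-back period (assumption)
) -> bool:
    """Count-plus-gap modellability test for one risk factor.

    Passes only if the window holds at least min_count observation days and no
    two consecutive observations are more than max_gap apart. Counting at most
    one observation per calendar day is an assumption of this sketch.
    """
    days = sorted({d for d in obs_dates if as_of - window < d <= as_of})
    if len(days) < min_count:
        return False
    return all(later - earlier <= max_gap for earlier, later in zip(days, days[1:]))

as_of = date(2018, 10, 26)
biweekly = [as_of - timedelta(days=14 * i) for i in range(26)]   # 26 observations, 14 days apart
gapped = [d for i, d in enumerate(biweekly) if i not in (1, 2)]  # 24 observations, one 42-day gap
print(is_modellable(biweekly, as_of))  # True
print(is_modellable(gapped, as_of))    # False: count passes, gap test fails
```

Because the function takes only observation dates and an as-of date, the same evidence always yields the same result, which is the kind of traceability supervisors are likely to look for.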

So, by putting data and quality back together in the context of FRTB/NMRF, the solution for the NMRF challenge should not just be a measurement of RPO count but a more holistic data governance approach covering the following areas:

- RPO coverage from the perspective of unique underlying assets as well as total count;

- Comprehensiveness of the reference data, and accuracy of the pricing data, used to enrich and better classify the RPO data;

- A data model to validate and normalize the data and to handle events such as deal-capture errors ("fat finger") and early terminations (partial or full);

- Data dictionary / transaction taxonomy to ensure consistency, uniqueness and completeness of the data to allow accurate mapping of the RPOs to risk factors;

- Committed quotes: are pre-trade and post-trade RPOs treated homogeneously, or are committed quotes treated differently?

- Timeliness of the service: is it an end-of-day (EOD) service, or does it take T+2/3 to reconcile and process the data?

- Evidence basis: is the pool of RPOs based on inventory or on single evidence of trade?
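Two of the areas above, the validation data model and RPO coverage, can be sketched in a few lines of Python. This is a hypothetical illustration: the `RawRecord` fields, the `"NEW"`/`"CANCEL"`/`"TERMINATE"` event codes and the 50% median-deviation fat-finger screen are assumptions, not an industry standard. Excluding termination events from the count while keeping the original trade is likewise a design choice of this sketch.

```python
from dataclasses import dataclass
from statistics import median

@dataclass(frozen=True)
class RawRecord:
    trade_id: str
    underlying: str
    price: float
    event: str  # hypothetical event codes: "NEW", "CANCEL", "TERMINATE"

def clean(records: list[RawRecord], tolerance: float = 0.5) -> list[RawRecord]:
    """Validate and normalize raw trade records into a list of usable RPOs."""
    # Trades cancelled after booking (e.g. deal-capture errors) are removed entirely.
    cancelled = {r.trade_id for r in records if r.event == "CANCEL"}
    seen: set[str] = set()
    kept: list[RawRecord] = []
    for r in records:
        # Termination events (partial or full) are lifecycle updates, not new prices;
        # duplicate submissions of the same trade are counted once.
        if r.event != "NEW" or r.trade_id in cancelled or r.trade_id in seen:
            continue
        seen.add(r.trade_id)
        kept.append(r)
    # Fat-finger screen: drop prices more than `tolerance` (relative) away from
    # the median price of the same underlying.
    medians = {u: median(r.price for r in kept if r.underlying == u)
               for u in {r.underlying for r in kept}}
    return [r for r in kept
            if abs(r.price - medians[r.underlying]) <= tolerance * abs(medians[r.underlying])]

def coverage(rpos: list[RawRecord]) -> dict[str, int]:
    """Report RPO coverage both as a total count and by unique underlying."""
    return {"total_rpos": len(rpos),
            "unique_underlyings": len({r.underlying for r in rpos})}

raw = [
    RawRecord("A1", "ACME 5Y CDS", 100.0, "NEW"),
    RawRecord("A2", "ACME 5Y CDS", 102.0, "NEW"),
    RawRecord("A3", "ACME 5Y CDS", 1020.0, "NEW"),       # fat-finger price
    RawRecord("A2", "ACME 5Y CDS", 102.0, "NEW"),        # duplicate submission
    RawRecord("B1", "BETA 5Y CDS", 250.0, "NEW"),
    RawRecord("B2", "BETA 5Y CDS", 255.0, "NEW"),
    RawRecord("B2", "BETA 5Y CDS", 255.0, "CANCEL"),     # booked in error
    RawRecord("B1", "BETA 5Y CDS", 250.0, "TERMINATE"),  # early termination event
]
cleaned = clean(raw)
print([r.trade_id for r in cleaned])  # ['A1', 'A2', 'B1']
print(coverage(cleaned))              # {'total_rpos': 3, 'unique_underlyings': 2}
```

A median-based screen is deliberately crude and becomes unreliable when an underlying has only a handful of observations; a fuller data model would also draw on the reference and pricing data described in the list.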

The pursuit of good quality data is an ongoing struggle for most banks, and the pain is amplified tenfold when trying to pool and amalgamate multiple sources (such as bank submissions, exchanges and trade repositories). Banks realize that relying on their own trading activity alone can only take them so far, leaving them with a large universe of NMRFs. The BCBS acknowledged as much in its March 2018 Consultative Document by emphasizing the importance of pooling in mitigating the NMRF challenge. Participating in a pooling scheme is no longer a nice-to-have but a must-have. Banks that need third-party data to address their NMRF challenge must therefore look not only for "good data" but also for good data models and processes, which have become paramount.
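Pooling raises a specific version of the uniqueness problem: the same trade may arrive both from a bank submission and from a trade repository, typically under different identifiers, and counting it twice would overstate modellability. The sketch below merges sources on a hypothetical economic key (risk factor, trade date, price, notional) and records which sources reported each trade. A real pooling scheme would need a more robust matching rule, since two genuinely different trades can share these fields.

```python
from collections import Counter
from datetime import date

def pool(sources: dict[str, list[dict]]) -> tuple[dict[tuple, set[str]], Counter]:
    """Merge RPOs from several sources, counting each trade once.

    Records are matched on a hypothetical economic key; each value lists the
    sources that reported the trade, which keeps the pooled count traceable.
    """
    merged: dict[tuple, set[str]] = {}
    for source_name, records in sources.items():
        for rec in records:
            key = (rec["risk_factor"], rec["trade_date"], round(rec["price"], 6), rec["notional"])
            merged.setdefault(key, set()).add(source_name)
    per_factor = Counter(key[0] for key in merged)
    return merged, per_factor

sources = {
    "bank": [
        {"risk_factor": "ACME 5Y CDS", "trade_date": date(2018, 10, 1), "price": 102.0, "notional": 5_000_000},
    ],
    "trade_repository": [
        # Same trade as the bank submission; matched on its economics, not an identifier.
        {"risk_factor": "ACME 5Y CDS", "trade_date": date(2018, 10, 1), "price": 102.0, "notional": 5_000_000},
        {"risk_factor": "ACME 5Y CDS", "trade_date": date(2018, 10, 8), "price": 101.5, "notional": 10_000_000},
    ],
    "exchange": [
        {"risk_factor": "BETA 5Y CDS", "trade_date": date(2018, 10, 2), "price": 250.0, "notional": 1_000_000},
    ],
}
merged, per_factor = pool(sources)
print(sum(len(v) for v in sources.values()))  # 4 raw records
print(dict(per_factor))                      # {'ACME 5Y CDS': 2, 'BETA 5Y CDS': 1}
```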

In conclusion, with the final FRTB text due to be published shortly, banks are re-energizing their FRTB programs and starting to work in earnest on their internal models approach (IMA) plans. This will require countless decisions, and in that decision-making process the scarcest commodity will be good quality data. The "Garbage In, Garbage Out" paradigm will force banks to make acquiring and processing reliable data a top priority. And time is short: delay too long and the paradigm will mutate into "Garbage data in, Garbage decisions out".

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.