
Research — 13 Dec, 2023

Highlights

One year after ChatGPT's launch, there is greater focus on the tensions between AI monetization and safety. The recent drama at OpenAI highlights the need for regulation.

While this edition of the digest was always likely to be anchored by OpenAI LLC, since the month marks the first anniversary of ChatGPT's launch, we had not anticipated the chaos at OpenAI. Within 48 hours (Nov. 17–19), OpenAI CEO Sam Altman was fired, President Greg Brockman quit in sympathy, other key staff resigned in protest, and Altman and Brockman were then hired by Microsoft Corp. to start a new advanced AI research unit. Altman returned after a new board agreed "in principle" to reinstate him, just before everyone took a breather for Thanksgiving. The events will likely change OpenAI and its relationship with Microsoft, in addition to putting AI regulation at the front of everybody's minds.

With OpenAI and Microsoft making several announcements at OpenAI DevDay and Microsoft Ignite, it is unsurprising that they dominated technology commentary over the past few weeks. The nature of those headlines was less expected: Altman's firing and reinstatement exposed frictions within the generative AI space. The media spotlight quickly shifted from new releases to the tensions between monetization and safety, and to the roles that startups and established technology providers play in the space. While many have paid lip service to concerns about AI safety, few were aware of how obsessed some within the AI community were with the potential for catastrophe. There now seem to be clear lines between so-called AI "doomers" and "boomers," or "decelerationists" and "accelerationists." The episode shed even more light on the need for regulation. Having the safety of humanity in the hands of four people on a board of directors (as they saw it) now looks absurd. President Joe Biden's Executive Order, the EU's AI Act and the UK's AI Safety Summit now look well timed, and it is still only one year since the launch of ChatGPT.

Product releases and updates


Microsoft made a number of announcements at its Ignite conference, including new capabilities within Azure Machine Learning such as large language model (LLM) workflow development capabilities, a datastore known as OneLake and an expanded model catalog. The model catalog is expanding to include models available on Hugging Face, among them Stability AI's Stable Diffusion models. Microsoft also added Meta Platforms Inc.'s Llama 2 and Code Llama models to its catalog and cited models from Mistral and Cohere that would be available in its new Models as a Service offering. Expanding the range of available models is an important update for Microsoft, whose generative AI offering had been largely anchored to OpenAI models. The move also shapes the direction of Azure AI Studio, a generative AI development hub designed to work with an array of prebuilt and customizable AI models.

Azure AI Studio is positioned as a unified platform for building generative AI capabilities. Developers can use the platform to build, test and deploy prebuilt and customized AI models with tooling that supports fine-tuning, evaluation, multimodal capabilities and prompt flow orchestration. Microsoft also announced its move into custom silicon, with the Microsoft Azure Maia and Microsoft Azure Cobalt chips, as well as updates related to Microsoft Copilot. These included embedding Security Copilot across the Microsoft Security portfolio and a development environment, Copilot Studio, so organizations can build their own Copilot applications.

GitHub Universe 2023 also featured a number of announcements related to generative AI, framed by the statement, "Just as GitHub was founded on Git, today we are re-founded on Copilot." General availability of GitHub Copilot Chat was announced for December, alongside a note that it would be integrated directly into github.com. Copilot Enterprise, which offers fine-tuned models, code-review capabilities and document search, will be available from February 2024.

OpenAI made a number of announcements at its DevDay. Most attention went to the company's GPT-4 Turbo model, which is significantly cheaper to run than GPT-4, and to a new Assistants API designed to help developers incorporate AI assistants into their own applications. Customization was an important theme for OpenAI, with the company showcasing tailored "GPTs" and launching code-free tooling to build them. Users will be able to make these versions available through a GPT Store. In line with this customization drive, the company is opening an access program for GPT-4 fine-tuning, and through its Custom Models program, organizations can apply to develop a custom GPT-4 model designed for a specific domain. Fine-tuning has so far been restricted to GPT-3.5, which has limited the customizability of OpenAI's models.

With the claim that "ChatGPT can see, hear and speak," OpenAI also rolled out vision capabilities for GPT-4 Turbo and a new text-to-speech model. In October, it launched DALL·E 3 in ChatGPT Plus and Enterprise, and at DevDay it indicated that DALL·E 3 would also be available via its Images API. Microsoft also announced the availability of DALL·E 3 within Azure OpenAI Service at Ignite. These updates have since been somewhat overshadowed by the leadership turmoil at OpenAI.

International Business Machines Corp. unveiled watsonx.governance to enable organizations to build, train, deploy and now govern both generative and traditional AI models. Large language models and other foundation models pose distinct security and governance challenges, and watsonx.governance, generally available in December, aims to tackle some of them. Its capabilities include detecting when thresholds for quality metrics and drift are exceeded for an LLM's inputs and outputs, including the detection of toxic language and personally identifiable information. Watsonx.governance also captures facts about models throughout the development process, which organizations can use to manage risk according to their own tolerance for bias and model drift. It can also aid compliance with emerging AI regulation, including the upcoming EU AI Act. The product currently works only on models hosted in IBM Cloud, but the road map includes integrations with hyperscale cloud vendors, the ability to manage and monitor third-party models, and an on-premises version in the first quarter of 2024.
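Threshold-based monitoring of the kind attributed to watsonx.governance can be illustrated with a small, hypothetical Python sketch: compute metrics for an LLM's inputs and outputs over a monitoring window, compare them with tolerance levels the organization sets, and report which limits were breached. This does not use or reproduce any IBM API. The population stability index (PSI) as the drift measure, the threshold values, the `Thresholds` and `check_llm_window` names, and the email regex standing in for PII detection are all assumptions made for illustration; toxicity detection is omitted.

```python
from __future__ import annotations

import math
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    """Hypothetical tolerance levels an organization might set (not IBM defaults)."""
    max_drift_psi: float = 0.2  # alert if the input distribution shifts beyond this PSI
    min_quality: float = 0.8    # alert if a quality score in [0, 1] falls below this


# Crude stand-in for PII detection: flags anything that looks like an email address.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def population_stability_index(baseline: list[float], current: list[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned distributions, each given as a proportion per bin."""
    if len(baseline) != len(current):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for b, c in zip(baseline, current):
        b, c = max(b, eps), max(c, eps)  # avoid log(0) when a bin is empty
        psi += (c - b) * math.log(c / b)
    return psi


def check_llm_window(baseline_bins: list[float], current_bins: list[float],
                     quality_score: float, output_text: str,
                     limits: Thresholds | None = None) -> list[str]:
    """Return the names of any thresholds breached in one monitoring window."""
    limits = limits or Thresholds()
    alerts = []
    if population_stability_index(baseline_bins, current_bins) > limits.max_drift_psi:
        alerts.append("drift")
    if quality_score < limits.min_quality:
        alerts.append("quality")
    if EMAIL_PATTERN.search(output_text):
        alerts.append("pii")
    return alerts


if __name__ == "__main__":
    # Baseline: prompt lengths split evenly across two bins; now most prompts are short.
    print(check_llm_window([0.5, 0.5], [0.9, 0.1], quality_score=0.9,
                           output_text="Contact jane@example.com for details."))
    # ['drift', 'pii']
```

PSI is only one of several common drift measures and was chosen here because it needs nothing beyond the standard library; a production governance tool would track many metrics per model and keep a record of them over time.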

IBM also announced the availability of watsonx Code Assistant for Red Hat Ansible Lightspeed and watsonx Code Assistant for Z in late October. These generative-AI-powered assistants are built on IBM's Granite foundation models, specifically the company's CodeLLM model, which has been tuned to underpin its Ansible and application-modernization offerings. Code Assistant for Z is designed to understand an application, automate refactoring and help users translate COBOL code into Java.

OctoML, an AI-optimization startup, launched OctoAI Image Gen in November. The offering includes Stable Diffusion API endpoints and SDKs, and is designed to improve inference speed and customizability. OctoML positions its overall offering as helping businesses run, tune and scale generative AI, and the company's Image Gen represents its first packaged stack offering.

Snowflake Inc. announced Snowflake Cortex, a managed service designed to aid the development of LLM apps. LLM functions available in private preview through the service include translation, text summarization, sentiment detection and answer extraction. Nongenerative models offered as serverless functions through the service will include forecasting, data classification and anomaly detection. The company also has Snowflake Copilot in private preview, which generates SQL from natural language.

Salesforce Inc. made an array of generative AI capabilities generally available in October. Within Sales Cloud, "sales summaries" became generally available, giving sales professionals key insights on opportunities or accounts. Other capabilities include new content generation features in Marketing Cloud, a conversation catch-up capability in Service Cloud and a product description generator in Commerce Cloud. Several capabilities were also released in pilot, including insight summaries within Salesforce's analytics offering, knowledge search within Service Cloud and a survey generator.

Startup xAI released its first AI model, Grok. Founded by Elon Musk in March 2023, xAI is closely aligned with X, the social media platform formerly known as Twitter and another company owned by Musk. One differentiator xAI set out for its model is "real-time knowledge" pulled from the X platform. Musk suggested the model will be made available to X's Premium+ subscribers, the highest of the three tiers of its subscription service.

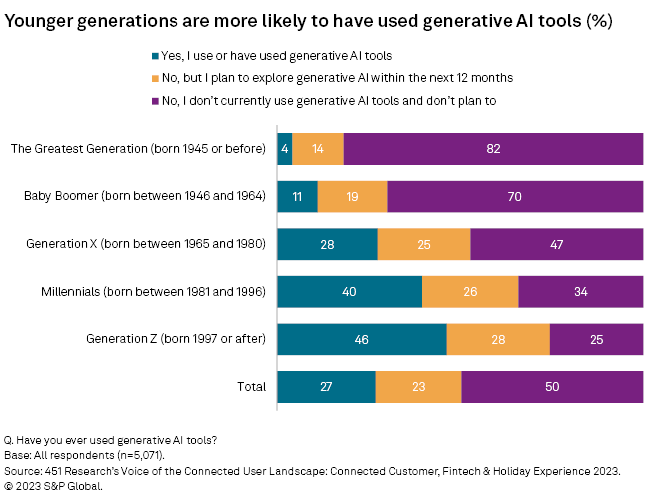

Google announced it would allow US teenagers to use its Search Generative Experience (SGE), a service previously restricted to users aged 18 and over. Teenagers can sign up for SGE through Search Labs, a program that allows users to experiment with new capabilities. The company says it has put additional safeguards in place, including around illegal activity and age-gated content. As the chart illustrates, younger generations are more likely to have used generative AI capabilities, and Google notes that among its existing users, the highest feedback scores for generative search came from those aged 18–24. Opening generative search to teenagers could extend this engaged cohort to even younger users.

German camera manufacturer Leica launched the M11-P, the world's first camera with Content Credentials built in. Content Credentials is a secure metadata protocol developed through the Content Authenticity Initiative, which Adobe Inc. co-founded in 2019 and whose members include Getty Images Holdings Inc., Leica, Microsoft and Reuters. Every image taken with the M11-P includes standard metadata about time and location, plus details of who took the photo and how, all protected by a digital signature, so content creators can get credit for their work and keep a greater degree of control over it.
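The value of a signed provenance record is that any later change becomes detectable. The hypothetical Python sketch below binds capture metadata to a hash of the image bytes and attaches a tag computed over both, so editing either the pixels or the metadata breaks verification. It is not the Content Credentials format: the real protocol relies on certificate-backed public-key signatures, whereas this sketch swaps in a standard-library HMAC with an illustrative shared key purely to demonstrate tamper evidence. The field names and the `sign_capture` and `verify_capture` helpers are likewise assumptions.

```python
import hashlib
import hmac
import json

# Hypothetical shared key for the demo only. Real Content Credentials rely on
# certificate-backed public-key signatures, not a secret shared with verifiers.
DEMO_KEY = b"demo-key-not-for-production"


def _canonical(manifest: dict) -> bytes:
    # Sorted keys give a stable byte encoding, so signer and verifier hash identical bytes.
    return json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode()


def sign_capture(image_bytes: bytes, metadata: dict, key: bytes = DEMO_KEY) -> dict:
    """Bind metadata to the exact image bytes and attach a tag over both."""
    manifest = dict(metadata, image_sha256=hashlib.sha256(image_bytes).hexdigest())
    tag = hmac.new(key, _canonical(manifest), hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": tag}


def verify_capture(image_bytes: bytes, credential: dict, key: bytes = DEMO_KEY) -> bool:
    """True only if neither the image nor its metadata changed after signing."""
    manifest = credential["manifest"]
    if manifest.get("image_sha256") != hashlib.sha256(image_bytes).hexdigest():
        return False  # the pixels were altered after capture
    expected = hmac.new(key, _canonical(manifest), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, credential["signature"])


if __name__ == "__main__":
    photo = b"raw-sensor-data"
    cred = sign_capture(photo, {"author": "A. Photographer", "device": "M11-P",
                                "captured_at": "2023-11-01T10:00:00Z"})
    print(verify_capture(photo, cred))            # True
    print(verify_capture(photo + b"edit", cred))  # False: image changed
    forged = {"manifest": dict(cred["manifest"], author="Someone Else"),
              "signature": cred["signature"]}
    print(verify_capture(photo, forged))          # False: metadata changed
```

With a public-key scheme, as in the real protocol, anyone can verify a credential without holding the key that created it, which is what lets a photographer's signature be checked by third parties.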

Funding and M&A

Foundation model provider Aleph Alpha raised $500 million in a series B round co-led by Schwarz Group; Innovation Park Artificial Intelligence, an AI hub based in Germany; and Robert Bosch Venture Capital. Other participants in this round included Christ&Company Consulting, Hewlett Packard Enterprise Co. and Burda Principal Investments. Existing investor SAP SE also participated. It brings the total raised by Aleph Alpha to $533.6 million.

AI21 Labs announced a $53 million extension to its already sizeable $155 million series C round. Notable investors in the round include NVIDIA Corp., Intel Capital and Alphabet Inc. The company is best known for its Jurassic-2 foundation models and for developing task-specific models tied to industries and use cases.

Stability AI also received a $50 million round of debt financing led by Intel Corp. According to S&P Capital IQ Pro, this brings the company's total funding to $201 million, of which $95 million is debt; in fact, its last three rounds have all been debt financing. Stability AI has models for audio, image, language and video generation, but the project most closely associated with the company is Stable Diffusion, a text-to-image generator.

Politics and regulations

The UK government's AI Safety Summit was held at Bletchley Park on Nov. 1 and 2. It produced the "Bletchley Declaration," signed by representatives of 28 countries, which concluded that AI poses significant risks as well as opportunities. Signatories included China and the US, whose representatives took part in an on-stage discussion. The parties agreed to meet every six months, with the next meeting hosted by South Korea, followed by France. The summit also saw Yoshua Bengio chosen to lead a "State of the Science" report to be published at the next AI Safety Summit. Bengio is one of the pioneers of deep learning and has expressed strong concerns about the potential negative aspects of AI since ChatGPT emerged. The event concluded with Prime Minister Rishi Sunak interviewing Elon Musk, which we presume other heads of state won't be doing when they host the summit.

A letter arguing for more "open" AI development has gained traction, with prominent signatories from academia and the AI industry. Hosted by Mozilla, the letter takes aim at the "idea that tight and proprietary control of foundational AI models is the only path to protecting us from society-scale harm" and is designed to address concerns that regulation might lead to a concentrated, closed market. The letter offers few specific policy proposals but stresses the need for lower barriers to entry for new players and a commitment to open-source and open-science development.

451 Research is a technology research group within S&P Global Market Intelligence. For more about 451 Research, please contact 451ClientServices@spglobal.com.

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.