Featured Topics

Featured Products

Events

S&P Global Offerings

Featured Topics

Featured Products

Events

S&P Global Offerings

Featured Topics

Featured Products

Events

S&P Global Offerings

Featured Topics

Featured Products

Events

Financial and Market intelligence

Fundamental & Alternative Datasets

Government & Defense

Professional Services

Banking & Capital Markets

Economy & Finance

Energy Transition & Sustainability

Technology & Innovation

Podcasts & Newsletters

Financial and Market intelligence

Fundamental & Alternative Datasets

Government & Defense

Professional Services

Banking & Capital Markets

Economy & Finance

Energy Transition & Sustainability

Technology & Innovation

Podcasts & Newsletters

Research — Feb 18, 2025

By Alexander Johnston

The high performance and low cost of Hangzhou DeepSeek Artificial Intelligence Co. Ltd.'s V3 and R1 models have ignited the market, challenging long-standing assumptions. We examine whether these releases fundamentally reshape the paradigm, or represent a continuation of existing trends.

While DeepSeek does help disrupt the narrative underpinning generative AI discussions, its recent releases have too rapidly evolved into a new one. The releases are best read as a contribution to longer discussions around open- versus closed-source models, the long-term viability of specialist foundation model providers, and how much AI infrastructure will actually be required. From an infrastructure perspective, these developments should at minimum give both buyers and suppliers pause to evaluate their current strategies while potentially opening the door to broader democratization within the market.

Context

A strong narrative has underpinned generative AI discussions since the popularization of the technology in late 2022, with the release of ChatGPT. This narrative has driven the investment strategies undertaken by companies and governments, and framed AI decision-making. It is built on interrelated pillars. The first is that the US would take a leading role in generative AI, and its policymakers have taken steps to ensure the US will maintain its position. The second is that ever-larger, more resource-hungry, models would define the future of AI. The third is that GPU scarcity would continue to define markets, and that major AI infrastructure investments would be required. The fourth is that only a small cohort of organizations would have the financial muscle to build frontier models, and that there would be commercial viability in building closed-source proprietary models. Few of these pillars were structurally sound, and DeepSeek has helped further undermine some of these narrative foundations. As this narrative has powered the waves of investment many organizations and investors have made, it is perhaps unsurprising that the market has been shaken.

DeepSeek was founded by Liang Wenfeng — the co-founder of quantitative hedge fund High-Flyer — in May 2023. Operating as an independent AI research lab under High-Flyer, DeepSeek initially gained attention in Europe and the US after the launch of a code generation model in November 2023. This release was followed by a series of large language models (LLMs). The release of DeepSeek V2 in May 2024, along with a related code generation variant, garnered attention for its innovative architectural design. This design featured a cache-compression technique that accelerated text generation and reduced training costs through an efficient mixture-of-experts approach.

DeepSeek's follow-up model, V3 — a 671-parameter LLM released in December 2024 — had a delayed but market-altering response. With the release of reasoning model R1 in January, and the subsequent popularity of the DeepSeek mobile app in the US, investors became more aware of the major efficiency opportunity associated with V3. The open-source model performs well against frontier language models across an array of benchmarks, but the most notable aspect to onlookers is how cost- and resource-efficiently the models were trained. V3 was reportedly trained on a cluster equipped with just 2,048 NVIDIA H800 GPUs, at a fraction of the cost and compute of other foundation models.

What is DeepSeek doing differently architecturally?

DeepSeek has consistently prioritized efficiency, achieving significant optimization gains in both its V2 and V3 model releases. With V3, DeepSeek introduced additional innovations, including a new strategy for optimized load balancing. Load balancing is a critical issue in mixture-of-experts frameworks, which many AI developers use to enhance efficiency and scale large models. This balancing refers to efficiently distributing tasks among experts. By overcoming associated communication bottlenecks, DeepSeek significantly improved model training efficiency.

DeepSeek R1 also has notable differentiating characteristics. It is the first major open-source model to leverage reinforcement learning without supervised fine-tuning, augmented by a foundational level of understanding through a minimally labeled dataset. Reinforcement learning allows an AI to optimize its actions by iteratively receiving feedback in the form of rewards or penalties. This approach could have an impact on reducing the reliance on human-labeled data. By removing the supervised fine-tuning step — and consequently tasks like annotation — it should make it easier for models to iteratively improve.

When considering the architectural approaches and their impact on training costs, it is important to note that the DeepSeek V3 paper only cited the cost of the final training run. This excludes costs associated with talent, research and experimentation. Perhaps more importantly, the inferencing costs are significantly lower for DeepSeek's R1 API compared with OpenAI LLC's o1 reasoning model. At the time of writing, the token input costs for R1, if the request is not addressed by contextual caching, is $0.55 for 1 million tokens. The output costs are $2.19 per 1 million output tokens. In comparison with o1 for text, it is $15 and $60 respectively. These cost advantages could widen the tasks organizations are able to deploy reasoning models to address, and for software vendors significantly increases the viability of integrating generative AI without disrupting their commercial model. These advantages are particularly key because, according to 451 Research's Voice of the Enterprise: AI & Machine Learning, Use Cases 2025, cost has emerged as the leading decision-making factor for AI project prioritization.

The geopolitical implications of DeepSeek

The representation that DeepSeek's V3 release represents a sudden rebalancing in global AI leadership is misplaced, in part because it neglects a longer-term research acceleration by Chinese labs. Chinese startups had already made major strides in key areas, with high-quality video models an illustratable area of development. Kuaishou Technology's Kling, ZhiPu AI's CogVideoX, Tencent Holdings Ltd.'s Hunyuan, MiniMax's Hailuo and Beijing Shengshu Technology Co. Ltd.'s Vidu all display impressive AI research capabilities in China. Indeed, within days of DeepSeek's R1 announcement, Alibaba Group Holding Ltd. announced the API of Qwen2.5-Max was available in Alibaba Cloud, accessible via the application Qwen Chat, and cited higher performance than R1 across notable benchmarks.

DeepSeek's V1 and V2 releases lagged the top US performers on the Chatbot Arena leaderboard, a popular crowdsourced user preference benchmark. However, both V3 and R1 are now top-10 performers, with R1 currently ranking fourth. DeepSeek claims that R1 delivers performance comparable to OpenAI's o1 across various mathematics, programming and general knowledge benchmarks, with differences largely within a percentage point. While this performance is particularly impressive for an open-source model, what has largely captured attention are these benchmarks in the context of the cost-effectiveness of DeepSeek's training process.

In terms of accuracy and capability, neither DeepSeek V3 nor R1 represents a new frontier. While leading proprietary AI developers like OpenAI and Google have delivered large multimodal models, both DeepSeek V3 and R1 work exclusively with text inputs and outputs. However, it is important to recognize that DeepSeek has released research on multimodal models and recently introduced Janus Pro, a low-cost text and image model.

Significant comparisons are being made between R1 and OpenAI's o1, which was released in preview in September 2024. This time lag also needs to be understood in the context of the lag between the research advancements of leading US model providers and what models are made publicly available. Additionally, some of the excitement about DeepSeek's capabilities from its user community may stem from the fact that many users were utilizing free public-facing offerings, rather than the paid tiers that provide access to higher-performance models. For example, users wanting to take advantage of OpenAI's reasoning models need to upgrade to a paid tier, which may have contributed to the perception that DeepSeek is eclipsing proprietary closed-source competitors.

Several US companies have been keen to underplay DeepSeek's advancements, with accusations of data theft or providing misleading information. Microsoft Corp. is reportedly investigating whether DeepSeek used OpenAI's technology as part of its approach to model distillation — a claim repeated by new AI and crypto czar David Sacks. Some figures in the US have made allegations that DeepSeek is using more, and higher-performance, GPUs than it claims for training. These claims have also been made by the CEO of Scale AI and Elon Musk. It is well-known that High-Flyer has established sizeable A100 clusters, although allegations that DeepSeek has access to high volumes of H100s are far more questionable. The open-source nature of DeepSeek's technology and research, as well as the company's long-term efficiency focus, adds significant credibility to its training claims. Notably some prominent generative AI US services — Perplexity AI Inc. and Microsoft among them — have already decided to support DeepSeek models.

The implication of DeepSeek for model development

DeepSeek's low training costs suggest that the viability of smaller companies establishing their own foundation models is higher than initially considered. Sam Altman of OpenAI, receiving questions at an event in India in 2023, suggested that it would be "hopeless" for a team with $10 million to compete with OpenAI in "training foundation models." This appeared to be accurate. Some prominent startups that had attempted to compete in foundation model development — Character Technologies Inc., Aleph Alpha GmbH and Inflection AI Inc. are among the most notable — had to redirect efforts as their ability to compete on a cost and resource basis with the leading players had proven too challenging.

The competitive moat for OpenAI and other closed-source model providers, such as Anthropic, has become increasingly questionable with the rise of high-performing open-source alternatives. DeepSeek, being open source and permissively licensed, is highly accessible, even compared with some nominally "open" competitors. The recent emergence from the stealth of Oumi AI, an open-source build-your-own-foundation-model provider, is an indicator of DeepSeek's contribution to the proprietary versus open-source debate.

The opportunity posed by open-source models is not new. The improving performance of open-source AI models against AI benchmarks has already set the stage for renewed inquiry. Not every application requires the highest level of accuracy or massive reasoning models: Releases like Meta Platforms Inc.'s Llama 3.3, Mistral AI SAS's Nemo and PHI-4 have already generated interest among organizations seeking on-device applications or greater control and transparency.

The implications of DeepSeek on GPU scarcity

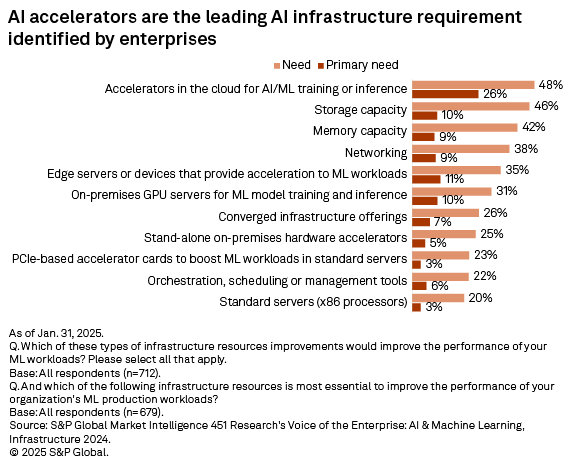

Organizations and AI developers have been keenly aware of GPU scarcity challenges. Just 29% of respondents to 451 Research's Voice of the Enterprise: AI & Machine Learning, Infrastructure 2024 perceived their organization's IT infrastructure as being able to meet future AI workload demands without upgrades. Common challenges with ML initiatives attributed to infrastructure included keeping pace with the latest AI advancements (37%) and model performance (36%). In particular, there was a keen awareness of the need for AI accelerators.

The suggestion that DeepSeek's efficiencies could reduce the demand for high-performance GPUs significantly impacted NVIDIA Corp.'s share price. However, it is possible that any democratization of AI due to lower costs or increased deployment flexibility may actually increase the need for GPUs for inference tasks. Most large language models indeed require a GPU for effective inference, particularly models with over 20 billion parameters, such as V3, which activates 37 billion parameters at a time. Therefore, the advancements made by DeepSeek should not be seen as diminishing demand, although it may change the mix of GPUs required. In fact, some service disruptions experienced by DeepSeek's AI assistant — although attributed by the company to "large-scale malicious attacks" — may potentially indicate infrastructure limitations.

This article was published by S&P Global Market Intelligence and not by S&P Global Ratings, which is a separately managed division of S&P Global.

451 Research is a technology research group within S&P Global Market Intelligence. For more about the group, please refer to the 451 Research overview and contact page.

Location

Products & Offerings

Segment